7-Main_NLP_tasks-3-Translation

中英文对照学习,效果更佳!

原课程链接:https://huggingface.co/course/chapter7/4?fw=pt

Translation

翻译

Let’s now dive into translation. This is another sequence-to-sequence task, which means it’s a problem that can be formulated as going from one sequence to another. In that sense the problem is pretty close to summarization, and you could adapt what we will see here to other sequence-to-sequence problems such as:

现在让我们深入到翻译。这是另一个序列到序列的任务,这意味着这是一个可以被表述为从一个序列到另一个序列的问题。从这个意义上讲,这个问题非常接近于摘要,您可以将我们在这里看到的内容应用于其他序列到序列的问题,例如:

- Style transfer: Creating a model that translates texts written in a certain style to another (e.g., formal to casual or Shakespearean English to modern English)

- Generative question answering: Creating a model that generates answers to questions, given a context

If you have a big enough corpus of texts in two (or more) languages, you can train a new translation model from scratch like we will in the section on causal language modeling. It will be faster, however, to fine-tune an existing translation model, be it a multilingual one like mT5 or mBART that you want to fine-tune to a specific language pair, or even a model specialized for translation from one language to another that you want to fine-tune to your specific corpus.

- 风格转换:创建一个将某种风格的文本翻译成另一种风格的模型(例如,正式到随意,或莎士比亚式英语到现代英语)

- 生成式问答:创建一个模型,在给定上下文的情况下生成问题的答案

如果你有足够大的两种(或更多)语言的文本语料库,你可以从头开始训练一个新的翻译模型,就像我们在因果语言建模一节中所做的那样。然而,对现有的翻译模型进行微调会更快,无论是想要微调到特定语言对的mT5或mBART这样的多语言模型,还是想要微调到特定语料库的专门用于从一种语言翻译到另一种语言的模型。

In this section, we will fine-tune a Marian model pretrained to translate from English to French (since a lot of Hugging Face employees speak both those languages) on the KDE4 dataset, which is a dataset of localized files for the KDE apps. The model we will use has been pretrained on a large corpus of French and English texts taken from the Opus dataset, which actually contains the KDE4 dataset. But even if the pretrained model we use has seen that data during its pretraining, we will see that we can get a better version of it after fine-tuning.

在这一部分中,我们将在KDE4数据集上微调一个经过预训练、用于将英语翻译成法语的Marian模型(因为许多Hugging Face员工都会说这两种语言),KDE4数据集是KDE应用程序的本地化文件的数据集。我们将使用的模型已经在取自Opus数据集的大型法语和英语文本语料库上进行了预训练,其中实际上包含KDE4数据集。但是,即使我们使用的预训练模型在预训练期间看到了这些数据,我们也会看到,经过微调后,我们可以得到更好的版本。

Once we’re finished, we will have a model able to make predictions like this one:

一旦我们完成了,我们将拥有一个能够做出如下预测的模型:

As in the previous sections, you can find the actual model that we’ll train and upload to the Hub using the code below and double-check its predictions here.

与前面的部分一样,您可以找到我们将使用以下代码训练并上传到Hub的实际模型,并在此处仔细检查其预测。

Preparing the data

准备数据

To fine-tune or train a translation model from scratch, we will need a dataset suitable for the task. As mentioned previously, we’ll use the KDE4 dataset in this section, but you can adapt the code to use your own data quite easily, as long as you have pairs of sentences in the two languages you want to translate from and into. Refer back to [Chapter 5] if you need a reminder of how to load your custom data in a Dataset.

要从头开始微调或训练翻译模型,我们需要一个适合该任务的数据集。如前所述,我们将在本节中使用KDE4数据集,但您可以非常容易地调整代码以使用您自己的数据,只要您有两种语言的句子对,您就可以将它们翻译成两种语言。如果您需要提示如何在Dataset中加载您的自定义数据,请参阅第5章。

The KDE4 dataset

KDE4数据集

As usual, we download our dataset using the load_dataset() function:

像往常一样,我们使用load_dataset()函数下载我们的数据集:


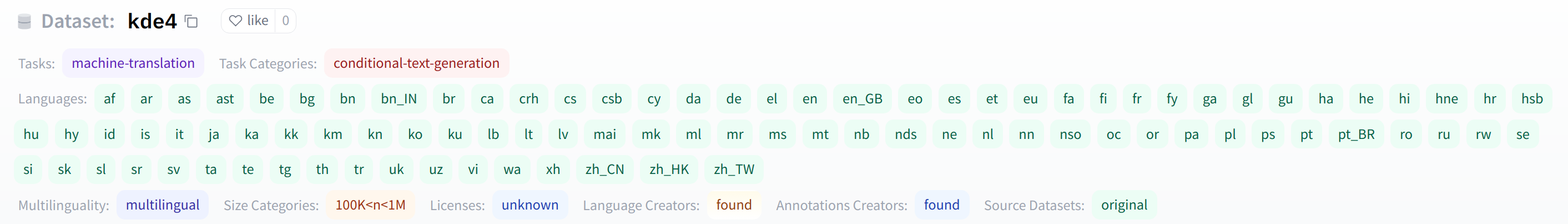

If you want to work with a different pair of languages, you can specify them by their codes. A total of 92 languages are available for this dataset; you can see them all by expanding the language tags on its dataset card.

如果您想使用一对不同的语言,您可以通过它们的代码指定它们。此数据集共有92种语言可用;您可以通过展开其数据集卡上的语言标记来查看所有语言。

Let’s have a look at the dataset:

让我们来看看这个数据集:


We have 210,173 pairs of sentences, but in one single split, so we will need to create our own validation set. As we saw in [Chapter 5], a Dataset has a train_test_split() method that can help us. We’ll provide a seed for reproducibility:

我们有210,173对句子,但都在同一个拆分中,所以我们需要创建自己的验证集。正如我们在第5章中所看到的,Dataset有一个train_test_split()方法可以帮助我们。我们将提供一个种子以保证可复现性:


We can rename the "test" key to "validation" like this:

我们可以将“test”键重命名为“validation”,如下所示:
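As a minimal sketch, the split and the rename can be wrapped in one small helper. It assumes `dataset` is a 🤗 Datasets `Dataset` (or anything exposing the same `train_test_split()` method); the helper name `make_splits`, the 90/10 ratio, and the seed value are illustrative choices, not values fixed by the library.

```python
def make_splits(dataset, train_size=0.9, seed=20):
    """Split a single-split dataset into train and validation parts.

    `dataset` is assumed to expose `train_test_split()`, as a
    `datasets.Dataset` does. Ratio and seed are illustrative.
    """
    # A fixed seed makes the shuffle, and therefore the split, reproducible.
    splits = dataset.train_test_split(train_size=train_size, seed=seed)
    # `train_test_split()` names the held-out part "test"; rename it.
    splits["validation"] = splits.pop("test")
    return splits
```

With the KDE4 data loaded as described above, this would be called on the single `"train"` split.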


Now let’s take a look at one element of the dataset:

现在,我们来看一下数据集的一个元素:


We get a dictionary with two sentences in the pair of languages we requested. One particularity of this dataset full of technical computer science terms is that they are all fully translated in French. However, French engineers are often lazy and leave most computer science-specific words in English when they talk. Here, for instance, the word “threads” might well appear in a French sentence, especially in a technical conversation; but in this dataset it has been translated into the more correct “fils de discussion.” The pretrained model we use, which has been pretrained on a larger corpus of French and English sentences, takes the easier option of leaving the word as is:

我们得到了一个字典,里面有我们要求的两种语言的两个句子。这个充满计算机科学专业术语的数据集的一个特殊之处在于,这些术语都被完整地翻译成了法语。然而,法国工程师经常偷懒,在交谈时大多数计算机科学专用词都保留英语原文。例如,在这里,“threads”一词很可能出现在法语句子中,特别是在技术对话中;但在这个数据集中,它被翻译成更正确的“fils de discussion”。我们使用的预训练模型已经在更大的法语和英语句子语料库上进行了预训练,它采用了更容易的选择,即保持单词的原样:


Another example of this behavior can be seen with the word “plugin,” which isn’t officially a French word but which most native speakers will understand and not bother to translate.

In the KDE4 dataset this word has been translated in French into the more official “module d’extension”:

这种行为的另一个例子可以在单词“plugin”中看到,这个词不是正式的法语单词,但大多数以法语为母语的人都能理解,不会费心去翻译。在KDE4数据集中,这个词被翻译成了更正式的法语“module d’extension”:


Our pretrained model, however, sticks with the compact and familiar English word:

然而,我们预先训练的模型坚持使用紧凑而熟悉的英语单词:


It will be interesting to see if our fine-tuned model picks up on those particularities of the dataset (spoiler alert: it will).

看看我们的微调模型是否会注意到数据集的这些特殊性将是一件有趣的事情(剧透提醒:它会的)。

✏️ Your turn! Another English word that is often used in French is “email.” Find the first sample in the training dataset that uses this word. How is it translated? How does the pretrained model translate the same English sentence?

✏️轮到你了!另一个经常在法语中使用的英语单词是“email”。在训练数据集中找到使用此单词的第一个样本。它是怎么翻译的?预训练模型如何翻译相同的英语句子?

Processing the data

正在处理数据

You should know the drill by now: the texts all need to be converted into sets of token IDs so the model can make sense of them. For this task, we’ll need to tokenize both the inputs and the targets. Our first task is to create our tokenizer object. As noted earlier, we’ll be using a Marian English to French pretrained model. If you are trying this code with another pair of languages, make sure to adapt the model checkpoint. The Helsinki-NLP organization provides more than a thousand models in multiple languages.

现在您应该熟悉这个流程了:所有文本都需要转换为token ID的集合,这样模型才能理解它们。对于这项任务,我们需要对输入和目标都进行标记化。我们的第一个任务是创建我们的tokenizer对象。如前所述,我们将使用Marian英语到法语的预训练模型。如果您正在用另一对语言尝试此代码,请确保调整模型检查点。Helsinki-NLP组织提供了一千多个多语言模型。


You can also replace the model_checkpoint with any other model you prefer from the Hub, or a local folder where you’ve saved a pretrained model and a tokenizer.

您还可以将model_checkpoint替换为Hub中您喜欢的任何其他模型,或保存了预训练模型和标记器的本地文件夹。

💡 If you are using a multilingual tokenizer such as mBART, mBART-50, or M2M100, you will need to set the language codes of your inputs and targets in the tokenizer by setting tokenizer.src_lang and tokenizer.tgt_lang to the right values.

💡如果您使用的是多语言标记器,如mBART、mBART-50或M2M100,则需要在标记器中设置输入和目标的语言代码,方法是将tokenizer.src_lang和tokenizer.tgt_lang设置为正确的值。

The preparation of our data is pretty straightforward. There’s just one thing to remember: you need to ensure that the tokenizer processes the targets in the output language (here, French). You can do this by passing the targets to the text_target argument of the tokenizer’s __call__ method.

我们的数据准备工作相当简单。只有一件事需要记住:您需要确保标记器以输出语言(这里是法语)处理目标。可以通过将目标传递给标记器的__call__方法的text_target参数来实现。

To see how this works, let’s process one sample of each language in the training set:

为了了解它是如何工作的,让我们处理训练集中每种语言的一个样本:


As we can see, the output contains the input IDs associated with the English sentence, while the IDs associated with the French one are stored in the labels field. If you forget to indicate that you are tokenizing labels, they will be tokenized by the input tokenizer, which in the case of a Marian model is not going to go well at all:

正如我们所看到的,输出包含与英语句子关联的输入ID,而与法语句子关联的ID存储在Labels字段中。如果您忘记指出您正在对标签进行标记化,则输入标记器将对它们进行标记化,在使用MARIAN模型的情况下,这一点也不会很顺利:


As we can see, using the English tokenizer to preprocess a French sentence results in a lot more tokens, since the tokenizer doesn’t know any French words (except those that also appear in the English language, like “discussion”).

正如我们所看到的,使用英语标记器对法语句子进行预处理会产生更多的标记词,因为标记器不知道任何法语单词(除了那些也出现在英语中的单词,如“讨论”)。

Since inputs is a dictionary with our usual keys (input IDs, attention mask, etc.), the last step is to define the preprocessing function we will apply on the datasets:

由于inputs是一个字典,其中包含我们常用的关键字(输入ID、注意掩码等),因此最后一步是定义我们将应用于数据集的预处理函数:


Note that we set the same maximum length for our inputs and outputs. Since the texts we’re dealing with seem pretty short, we use 128.

请注意,我们为输入和输出设置了相同的最大长度。因为我们处理的文本似乎很短,所以我们使用128。
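A preprocessing function along these lines could look like the sketch below. It assumes each batch stores its sentence pairs under a `"translation"` field keyed by language code (`"en"` and `"fr"`), and it takes the tokenizer as an explicit parameter so the function stays self-contained; both choices are assumptions made for illustration.

```python
MAX_LENGTH = 128  # same cap for inputs and targets, as noted above


def preprocess_function(examples, tokenizer, max_length=MAX_LENGTH):
    """Tokenize English inputs and French targets for one batch."""
    # Assumed layout: examples["translation"] is a list of
    # {"en": ..., "fr": ...} dictionaries.
    inputs = [pair["en"] for pair in examples["translation"]]
    targets = [pair["fr"] for pair in examples["translation"]]
    # `text_target` makes the tokenizer encode the targets in the output
    # language and return them under the "labels" key.
    return tokenizer(
        inputs, text_target=targets, max_length=max_length, truncation=True
    )
```

It can then be applied to every split with `map()`, for example `split_datasets.map(lambda batch: preprocess_function(batch, tokenizer), batched=True)`, where `split_datasets` stands for whatever name you gave your dataset.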

💡 If you are using a T5 model (more specifically, one of the t5-xxx checkpoints), the model will expect the text inputs to have a prefix indicating the task at hand, such as translate English to French:.

💡如果您使用的是T5模型(更具体地说,是t5-xxx检查点之一),该模型将期望文本输入带有一个指示当前任务的前缀,例如translate English to French:。

⚠️ We don’t pay attention to the attention mask of the targets, as the model won’t expect it. Instead, the labels corresponding to a padding token should be set to -100 so they are ignored in the loss computation. This will be done by our data collator later on since we are applying dynamic padding, but if you use padding here, you should adapt the preprocessing function to set all labels that correspond to the padding token to -100.

⚠️我们不会注意目标的注意面具,因为模型不会预料到这一点。相反,填充令牌对应的标签应该设置为-100,以便在损耗计算中忽略它们。这将由我们的数据整理程序稍后完成,因为我们正在应用动态填充,但如果您在这里使用填充,您应该调整预处理函数,将与填充令牌对应的所有标签设置为-100。
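If you do pad during preprocessing, the extra step described in this warning could be sketched as follows. This is a plain-Python illustration on lists of token IDs; the helper name `mask_padding_in_labels` is hypothetical.

```python
IGNORE_INDEX = -100  # label value that the loss computation skips


def mask_padding_in_labels(labels, pad_token_id):
    """Replace every padding token ID in `labels` with -100."""
    return [
        [IGNORE_INDEX if token == pad_token_id else token for token in seq]
        for seq in labels
    ]
```

In a real preprocessing function, `pad_token_id` would come from `tokenizer.pad_token_id`.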

We can now apply that preprocessing in one go on all the splits of our dataset:

现在,我们可以对数据集的所有拆分一次性应用该预处理:


Now that the data has been preprocessed, we are ready to fine-tune our pretrained model!

既然数据已经进行了预处理,我们就可以微调我们预先训练好的模型了!

Fine-tuning the model with the Trainer API

使用Trainer API对模型进行微调

The actual code using the Trainer will be the same as before, with just one little change: we use a Seq2SeqTrainer here, which is a subclass of Trainer that will allow us to properly deal with the evaluation, using the generate() method to predict outputs from the inputs. We’ll dive into that in more detail when we talk about the metric computation.

使用Trainer的实际代码将与前面相同,只有一个小小的变化:我们在这里使用Seq2SeqTrainer,它是Trainer的子类,使用generate()方法根据输入预测输出,从而让我们能够正确地处理评估。我们将在讨论指标计算时更详细地讨论这一点。

First things first, we need an actual model to fine-tune. We’ll use the usual AutoModel API:

首先,我们需要一个实际的模型来进行微调。我们将使用常用的AutoModel接口:


Note that this time we are using a model that was trained on a translation task and can actually be used already, so there is no warning about missing weights or newly initialized ones.

请注意,这一次我们使用的是针对翻译任务训练的模型,并且实际上已经可以使用,因此不会出现有关丢失权重或新初始化权重的警告。

Data collation

数据整理

We’ll need a data collator to deal with the padding for dynamic batching. We can’t just use a DataCollatorWithPadding like in [Chapter 3] in this case, because that only pads the inputs (input IDs, attention mask, and token type IDs). Our labels should also be padded to the maximum length encountered in the labels. And, as mentioned previously, the padding value used to pad the labels should be -100 and not the padding token of the tokenizer, to make sure those padded values are ignored in the loss computation.

我们需要一个数据整理器来处理动态批处理的填充。在本例中,我们不能只使用第3章中的DataCollatorWithPadding,因为它只填充输入(输入ID、注意力掩码和token类型ID)。我们的标签也应该填充到标签中遇到的最大长度。如前所述,用于填充标签的填充值应该是-100,而不是标记器的填充token,以确保在损失计算中忽略那些填充值。

This is all done by a DataCollatorForSeq2Seq. Like the DataCollatorWithPadding, it takes the tokenizer used to preprocess the inputs, but it also takes the model. This is because this data collator will also be responsible for preparing the decoder input IDs, which are shifted versions of the labels with a special token at the beginning. Since this shift is done slightly differently for different architectures, the DataCollatorForSeq2Seq needs to know the model object:

这都是由DataCollatorForSeq2Seq完成的。与DataCollatorWithPadding一样,它接受用于对输入进行预处理的tokenizer,但它也接受model。这是因为该数据整理器还将负责准备解码器输入ID,它们是标签的移位版本,开头带有一个特殊token。由于不同架构的移位方式略有不同,因此DataCollatorForSeq2Seq需要知道model对象:


To test this on a few samples, we just call it on a list of examples from our tokenized training set:

为了在几个样本上测试它,我们只需在我们的标记化训练集中的一个示例列表上调用它:


We can check our labels have been padded to the maximum length of the batch, using -100:

我们可以使用-100来检查我们的标签是否填充到了批次的最大长度:


And we can also have a look at the decoder input IDs, to see that they are shifted versions of the labels:

我们还可以查看解码器输入的ID,可以看到它们是标签的移位版本:


Here are the labels for the first and second elements in our dataset:

下面是我们的数据集中第一个和第二个元素的标签:


We will pass this data_collator along to the Seq2SeqTrainer. Next, let’s have a look at the metric.

我们会将这个data_collator传递给Seq2SeqTrainer。接下来,让我们来看看指标。
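To make the two jobs of the collator concrete (padding labels with -100 and building shifted decoder inputs), here is a simplified plain-Python sketch. It is not the library's implementation: the real shift is delegated to the model and differs between architectures, and the helper names and token IDs used here are made up for illustration.

```python
IGNORE_INDEX = -100  # padding value for labels, ignored by the loss


def pad_labels(batch_labels, pad_value=IGNORE_INDEX):
    """Right-pad every label sequence to the longest one in the batch."""
    max_len = max(len(seq) for seq in batch_labels)
    return [seq + [pad_value] * (max_len - len(seq)) for seq in batch_labels]


def shift_right(labels, decoder_start_token_id, pad_token_id):
    """Build decoder inputs: a start token, then the labels shifted by one."""
    shifted = [decoder_start_token_id] + labels[:-1]
    # -100 only means something to the loss, so use the real pad ID here.
    return [pad_token_id if token == IGNORE_INDEX else token for token in shifted]
```

At each position the decoder input holds the token that precedes the label to predict, which is what allows all positions to be trained in a single forward pass.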

Metrics

量度

The feature that Seq2SeqTrainer adds to its superclass Trainer is the ability to use the generate() method during evaluation or prediction. During training, the model will use the decoder_input_ids with an attention mask ensuring it does not use the tokens after the token it’s trying to predict, to speed up training. During inference we won’t be able to use those since we won’t have labels, so it’s a good idea to evaluate our model with the same setup.

Seq2SeqTrainer在其超类Trainer的基础上增加的功能是能够在评估或预测时使用generate()方法。在训练期间,模型将使用decoder_input_ids以及一个注意力掩码,该掩码确保模型不会使用它试图预测的token之后的token,以加快训练速度。在推理期间,我们将无法使用这些,因为我们没有标签,所以使用相同的设置来评估我们的模型是一个好主意。
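The attention mask mentioned here is often called a causal mask. A minimal sketch of its shape in plain Python (the function name `causal_mask` is hypothetical, and real models build the equivalent tensor internally):

```python
def causal_mask(seq_len):
    """Return a seq_len x seq_len grid of booleans.

    mask[i][j] is True when position i may attend to position j,
    that is, when j is not after i.
    """
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]
```

Each row only sees itself and earlier positions, so every target token can be predicted in parallel during training without looking ahead.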

As we saw in Chapter 1, the decoder performs inference by predicting tokens one by one — something that’s implemented behind the scenes in 🤗 Transformers by the generate() method. The Seq2SeqTrainer will let us use that method for evaluation if we set predict_with_generate=True.

正如我们在第1章中看到的,解码器通过逐个预测token来执行推理,这在🤗 Transformers中是由generate()方法在幕后实现的。如果我们设置predict_with_generate=True,Seq2SeqTrainer将允许我们使用该方法进行评估。
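Token-by-token prediction can be pictured with the greedy loop below. This is a deliberately simplified sketch: `next_token_fn` is a hypothetical stand-in for a forward pass of the model that returns the most likely next token ID, and the real generate() method supports other strategies, such as beam search, that are not shown here.

```python
def greedy_decode(next_token_fn, start_id, eos_id, max_new_tokens=20):
    """Generate token IDs one at a time until EOS or the length cap."""
    tokens = [start_id]
    for _ in range(max_new_tokens):
        # Each step sees every token generated so far.
        next_id = next_token_fn(tokens)
        tokens.append(next_id)
        if next_id == eos_id:
            break
    return tokens
```

Because each step depends on the previous outputs, this loop is much slower than the parallel training pass, which is why evaluation with generation takes a while.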

The traditional metric used for translation is the BLEU score, introduced in a 2002 article by Kishore Papineni et al. The BLEU score evaluates how close the translations are to their labels. It does not measure the intelligibility or grammatical correctness of the model’s generated outputs, but uses statistical rules to ensure that all the words in the generated outputs also appear in the targets. In addition, there are rules that penalize repetitions of the same words if they are not also repeated in the targets (to avoid the model outputting sentences like "the the the the the") and output sentences that are shorter than those in the targets (to avoid the model outputting sentences like "the").

翻译使用的传统指标是BLEU分数,这是Kishore Papineni等人在2002年的一篇文章中引入的。BLEU分数评估翻译与其标签的接近程度。它不衡量模型生成的输出的可理解性或语法正确性,而是使用统计规则来确保生成的输出中的所有单词也出现在目标中。此外,还有一些规则会惩罚在目标中没有重复出现的相同单词的重复(以避免模型输出类似“the the the the the”的句子),以及比目标更短的输出句子(以避免模型输出类似“the”的句子)。
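The two penalties described above can be illustrated on single words. The sketch below computes a clipped unigram precision and a brevity penalty with whitespace tokenization; the full BLEU score combines clipped precisions over longer n-grams as well, so treat this as an illustration of the idea rather than a BLEU implementation.

```python
import math
from collections import Counter


def clipped_unigram_precision(candidate, reference):
    """Share of candidate words found in the reference, with clipping."""
    cand_counts = Counter(candidate.split())
    ref_counts = Counter(reference.split())
    # A word is credited at most as many times as it occurs in the reference.
    clipped = sum(min(n, ref_counts[word]) for word, n in cand_counts.items())
    total = sum(cand_counts.values())
    return clipped / total if total else 0.0


def brevity_penalty(candidate, reference):
    """Penalty below 1 when the candidate is shorter than the reference."""
    c, r = len(candidate.split()), len(reference.split())
    if c == 0:
        return 0.0
    return 1.0 if c > r else math.exp(1 - r / c)
```

With the reference "the cat is on the mat", the candidate "the the the the the" only gets credit for two of its five words, while the candidate "the" has perfect precision but is heavily penalized for its length.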

One weakness with BLEU is that it expects the text to already be tokenized, which makes it difficult to compare scores between models that use different tokenizers. So instead, the most commonly used metric for benchmarking translation models today is SacreBLEU, which addresses this weakness (and others) by standardizing the tokenization step. To use this metric, we first need to install the SacreBLEU library:

BLEU的一个弱点是,它预计文本已经被标记化,这使得使用不同标记器的模型之间的分数很难比较。因此,如今用于对翻译模型进行基准测试的最常用的度量标准是Sacrebleu,它通过标准化标记化步骤来解决这一弱点(以及其他问题)。要使用此指标,我们首先需要安装Sacrebleu库:


We can then load it via evaluate.load() like we did in [Chapter 3]:

然后我们可以通过evaluate.load()加载它,就像我们在第3章中所做的那样:


This metric will take texts as inputs and targets. It is designed to accept several acceptable targets, as there are often multiple acceptable translations of the same sentence — the dataset we’re using only provides one, but it’s not uncommon in NLP to find datasets that give several sentences as labels. So, the predictions should be a list of sentences, but the references should be a list of lists of sentences.

这一指标以文本作为输入和目标。它被设计为可以接受多个可接受的目标,因为同一句子通常有多种可接受的翻译;我们使用的数据集只提供一个,但在NLP中,给出多个句子作为标签的数据集并不少见。所以,预测应该是一个句子列表,但参考译文应该是一个由句子列表组成的列表。

Let’s try an example:

让我们来看一个例子:


This gets a BLEU score of 46.75, which is rather good — for reference, the original Transformer model in the “Attention Is All You Need” paper achieved a BLEU score of 41.8 on a similar translation task between English and French! (For more information about the individual metrics, like counts and bp, see the SacreBLEU repository.) On the other hand, if we try with the two bad types of predictions (lots of repetitions or too short) that often come out of translation models, we will get rather bad BLEU scores:

这得到了46.75的BLEU分数,相当不错;作为参考,“Attention Is All You Need”论文中的原始Transformer模型在类似的英法翻译任务中取得了41.8的BLEU分数!(有关counts和bp等单个指标的更多信息,请参阅SacreBLEU存储库。)另一方面,如果我们尝试翻译模型中经常出现的两种糟糕的预测类型(大量重复或太短),我们将得到相当糟糕的BLEU分数:


The score can go from 0 to 100, and higher is better.

分数可以从0到100,越高越好。

To get from the model outputs to texts the metric can use, we will use the tokenizer.batch_decode() method. We just have to clean up all the -100s in the labels (the tokenizer will automatically do the same for the padding token):

要将模型输出转换为指标可以使用的文本,我们将使用tokenizer.batch_decode()方法。我们只需要清理标签中的所有-100(标记器会自动对填充token执行相同的操作):
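Putting these steps together, a metric function could be sketched as below. The factory name `build_compute_metrics` is hypothetical; it takes the tokenizer and the loaded metric as arguments so the sketch stays self-contained. It assumes the metric's `compute()` returns a dictionary with a `"score"` entry, and it uses plain Python loops for readability.

```python
def build_compute_metrics(tokenizer, metric):
    """Return a `compute_metrics` function bound to a tokenizer and metric."""

    def compute_metrics(eval_preds):
        preds, labels = eval_preds
        # Some models return extra outputs besides the generated token IDs.
        if isinstance(preds, tuple):
            preds = preds[0]
        decoded_preds = tokenizer.batch_decode(preds, skip_special_tokens=True)
        # -100 is not a valid token ID: swap it for the pad token first.
        labels = [
            [tokenizer.pad_token_id if t == -100 else t for t in seq]
            for seq in labels
        ]
        decoded_labels = tokenizer.batch_decode(labels, skip_special_tokens=True)
        # Predictions: list of strings. References: list of lists of strings.
        predictions = [pred.strip() for pred in decoded_preds]
        references = [[label.strip()] for label in decoded_labels]
        result = metric.compute(predictions=predictions, references=references)
        return {"bleu": result["score"]}

    return compute_metrics
```

The returned function has the `compute_metrics(eval_preds)` shape that the trainer expects.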


Now that this is done, we are ready to fine-tune our model!

现在,我们已经准备好微调我们的模型了!

Fine-tuning the model

微调模型

The first step is to log in to Hugging Face, so you’re able to upload your results to the Model Hub. There’s a convenience function to help you with this in a notebook:

第一步是登录Hugging Face,这样你就可以将结果上传到Model Hub。笔记本中有一个方便的功能可以帮助您完成此操作:


This will display a widget where you can enter your Hugging Face login credentials.

这将显示一个小部件,您可以在其中输入您的Hugging Face登录凭据。

If you aren’t working in a notebook, just type the following line in your terminal:

如果您不是在笔记本电脑上工作,只需在您的终端中键入以下行:


Once this is done, we can define our Seq2SeqTrainingArguments. Like for the Trainer, we use a subclass of TrainingArguments that contains a few more fields:

一旦完成,我们就可以定义我们的Seq2SeqTrainingArguments。与Trainer类似,我们使用TrainingArguments的子类,它包含更多的字段:


Apart from the usual hyperparameters (like learning rate, number of epochs, batch size, and some weight decay), here are a few changes compared to what we saw in the previous sections:

除了常见的超参数(如学习速率、历元数、批次大小和一些权重衰减)外,以下是与我们在前几节中看到的相比的一些变化:

- We don’t set any regular evaluation, as evaluation takes a while; we will just evaluate our model once before training and once after.

- We set fp16=True, which speeds up training on modern GPUs.

- We set predict_with_generate=True, as discussed above.

- We use push_to_hub=True to upload the model to the Hub at the end of each epoch.

Note that you can specify the full name of the repository you want to push to with the hub_model_id argument (in particular, you will have to use this argument to push to an organization). For instance, when we pushed the model to the huggingface-course organization, we added hub_model_id="huggingface-course/marian-finetuned-kde4-en-to-fr" to Seq2SeqTrainingArguments. By default, the repository used will be in your namespace and named after the output directory you set, so in our case it will be "sgugger/marian-finetuned-kde4-en-to-fr" (which is the model we linked to at the beginning of this section).

- 我们不设置任何定期评估,因为评估需要一段时间;我们只会在训练之前和训练之后各评估一次模型。

- 我们设置了fp16=True,这可以加快在现代GPU上的训练。

- 我们设置了predict_with_generate=True,如上所述。

- 我们使用push_to_hub=True在每个epoch结束时将模型上传到Hub。

请注意,您可以使用hub_model_id参数指定要推送到的存储库的全名(特别是,您必须使用此参数才能推送到组织)。例如,当我们将模型推送到huggingface-course组织时,我们将hub_model_id="huggingface-course/marian-finetuned-kde4-en-to-fr"添加到Seq2SeqTrainingArguments中。默认情况下,使用的存储库将位于您的命名空间中,并以您设置的输出目录命名,因此在我们的示例中,它将是"sgugger/marian-finetuned-kde4-en-to-fr"(这是我们在本节开头链接到的模型)。
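The settings discussed above can be collected as keyword arguments, as in the sketch below. The three flags and the output directory come from the text; the numeric hyperparameters are illustrative values only, and no evaluation setting is passed, which leaves periodic evaluation off by default.

```python
# Keyword arguments intended for Seq2SeqTrainingArguments.
training_kwargs = dict(
    output_dir="marian-finetuned-kde4-en-to-fr",  # default repository name
    save_strategy="epoch",           # save, and therefore push, every epoch
    learning_rate=2e-5,              # illustrative value
    per_device_train_batch_size=32,  # illustrative value
    per_device_eval_batch_size=64,   # illustrative value
    weight_decay=0.01,               # illustrative value
    num_train_epochs=3,              # illustrative value
    predict_with_generate=True,      # evaluate with generate()
    fp16=True,                       # mixed precision; needs a suitable GPU
    push_to_hub=True,                # upload to the Hub on each save
)

# Typical use, assuming 🤗 Transformers is installed:
#   from transformers import Seq2SeqTrainingArguments
#   args = Seq2SeqTrainingArguments(**training_kwargs)
```

To push to an organization, you would add `hub_model_id` to this dictionary as described above.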

💡 If the output directory you are using already exists, it needs to be a local clone of the repository you want to push to. If it isn’t, you’ll get an error when defining your Seq2SeqTrainer and will need to set a new name.

💡如果您正在使用的输出目录已经存在,则它需要是您要推送到的存储库的本地克隆。如果不是,则在定义您的Seq2SeqTrainer时会出现错误,需要设置一个新名称。

Finally, we just pass everything to the Seq2SeqTrainer:

最后,我们只需将所有内容传递给Seq2SeqTrainer:


Before training, we’ll first look at the score our model gets, to double-check that we’re not making things worse with our fine-tuning. This command will take a bit of time, so you can grab a coffee while it executes:

在培训之前,我们将首先查看我们的模型获得的分数,以再次检查我们的微调是否没有使情况变得更糟。此命令需要一些时间,因此您可以在执行该命令时喝杯咖啡:


A BLEU score of 39 is not too bad, which reflects the fact that our model is already good at translating English sentences to French ones.

BLEU的分数39分并不算太差,这反映出我们的模式已经擅长将英语句子翻译成法语句子。

Next is the training, which will also take a bit of time:

接下来是培训,也需要一点时间:


Note that while the training happens, each time the model is saved (here, every epoch) it is uploaded to the Hub in the background. This way, you will be able to resume your training on another machine if necessary.

请注意,在进行训练时,每次保存模型(这里是每个时期)时,都会将其上载到后台的Hub。这样,如果需要,您将能够在另一台计算机上恢复您的训练。

Once training is done, we evaluate our model again; hopefully we will see some improvement in the BLEU score!

一旦训练完成,我们将再次评估我们的模型–希望我们能在BLEU的分数上看到一些改善!


That’s a nearly 14-point improvement, which is great.

这提高了将近14分,这是很棒的。

Finally, we use the push_to_hub() method to make sure we upload the latest version of the model. The Trainer also drafts a model card with all the evaluation results and uploads it. This model card contains metadata that helps the Model Hub pick the widget for the inference demo. Usually, there is no need to say anything as it can infer the right widget from the model class, but in this case, the same model class can be used for all kinds of sequence-to-sequence problems, so we specify it’s a translation model:

最后,我们使用push_to_hub()方法来确保我们上传了模型的最新版本。Trainer还会起草一张包含所有评估结果的模型卡,并将其上传。此模型卡包含帮助Model Hub为推理演示挑选小部件的元数据。通常不需要说明什么,因为它可以从模型类推断出正确的小部件,但在这种情况下,同一个模型类可以用于所有类型的序列到序列的问题,所以我们指定它是一个翻译模型:


This command returns the URL of the commit it just did, if you want to inspect it:

如果您要检查它,此命令将返回它刚刚执行的提交的URL:


At this stage, you can use the inference widget on the Model Hub to test your model and share it with your friends. You have successfully fine-tuned a model on a translation task — congratulations!

在此阶段,您可以使用Model Hub上的推理小部件来测试您的模型并将其与您的朋友共享。您已经成功地对翻译任务中的模型进行了微调-祝贺您!

If you want to dive a bit more deeply into the training loop, we will now show you how to do the same thing using 🤗 Accelerate.

如果您想更深入地了解训练循环,我们现在将向您展示如何使用🤗Accelerate来做同样的事情。

A custom training loop

定制培训循环

Let’s now take a look at the full training loop, so you can easily customize the parts you need. It will look a lot like what we did in section 2 and Chapter 3.

现在让我们来看看完整的训练循环,这样您就可以轻松地定制所需的部件。它看起来很像我们在第2节和第3章中所做的。

Preparing everything for training

为训练做好一切准备

You’ve seen all of this a few times now, so we’ll go through the code quite quickly. First we’ll build the DataLoaders from our datasets, after setting the datasets to the "torch" format so we get PyTorch tensors:

您现在已经见过这一切几次了,所以我们将非常快速地浏览一下代码。首先,我们将数据集设置为“torch”格式以获得PyTorch张量,然后从数据集构建DataLoader:


Next we reinstantiate our model, to make sure we’re not continuing the fine-tuning from before but starting from the pretrained model again:

接下来,我们重新实例化我们的模型,以确保我们不会继续之前的微调,而是再次从预先训练的模型开始:


Then we will need an optimizer:

然后我们需要一个优化器:


Once we have all those objects, we can send them to the accelerator.prepare() method. Remember that if you want to train on TPUs in a Colab notebook, you will need to move all of this code into a training function, and you shouldn’t execute any cell that instantiates an Accelerator outside of that function.

一旦我们拥有了所有这些对象,我们就可以将它们发送到accelerator.prepare()方法。请记住,如果您想要在Colab笔记本中使用TPU进行训练,则需要将所有这些代码移到一个训练函数中,并且不应该在该函数之外执行任何实例化Accelerator的单元格。


Now that we have sent our train_dataloader to accelerator.prepare(), we can use its length to compute the number of training steps. Remember we should always do this after preparing the dataloader, as that method will change the length of the DataLoader. We use a classic linear schedule from the learning rate to 0:

既然我们已经将train_dataloader发送到了accelerator.prepare(),我们就可以使用它的长度来计算训练步数了。请记住,我们应该始终在准备DataLoader之后执行此操作,因为该方法将更改DataLoader的长度。我们使用从学习率线性衰减到0的经典调度:
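The arithmetic behind this schedule is small enough to write out by hand. The sketch below is plain Python with hypothetical helper names, and it assumes no warmup steps; in practice you would let the library's scheduler utilities do this for you.

```python
def num_training_steps(num_epochs, batches_per_epoch):
    """Total optimizer steps: one per batch, for every epoch.

    `batches_per_epoch` should be the dataloader length measured
    after it has been prepared.
    """
    return num_epochs * batches_per_epoch


def linear_lr(step, total_steps, base_lr):
    """Learning rate that decays linearly from `base_lr` to 0."""
    remaining = max(0, total_steps - step)
    return base_lr * remaining / total_steps
```

Halfway through training the learning rate is half its initial value, and it reaches 0 on the last step.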


Lastly, to push our model to the Hub, we will need to create a Repository object in a working folder. First log in to the Hugging Face Hub, if you’re not logged in already. We’ll determine the repository name from the model ID we want to give our model (feel free to replace the repo_name with your own choice; it just needs to contain your username, which is what the function get_full_repo_name() does):

最后,为了将我们的模型推送到Hub,我们需要在一个工作文件夹中创建一个Repository对象。如果你还没有登录,请先登录Hugging Face Hub。我们将根据我们想要为模型指定的模型ID来确定存储库名称(您可以随意将repo_name替换为您自己的选择;它只需要包含您的用户名,这正是函数get_full_repo_name()所做的):


Then we can clone that repository in a local folder. If it already exists, this local folder should be a clone of the repository we are working with:

然后,我们可以在本地文件夹中克隆该存储库。如果它已经存在,则此本地文件夹应该是我们正在使用的存储库的克隆:


We can now upload anything we save in output_dir by calling the repo.push_to_hub() method. This will help us upload the intermediate models at the end of each epoch.

现在,我们可以通过调用repo.push_to_hub()方法上传保存在output_dir中的任何内容。这将帮助我们在每个epoch结束时上传中间模型。

Training loop

训练循环

We are now ready to write the full training loop. To simplify its evaluation part, we define this postprocess() function that takes predictions and labels and converts them to the lists of strings our metric object will expect:

我们现在已经准备好编写完整的训练循环。为了简化其求值部分,我们定义了这个postprocess()函数,该函数接受预测和标签,并将它们转换为我们的metric对象期望的字符串列表:


The training loop looks a lot like the ones in section 2 and [Chapter 3], with a few differences in the evaluation part — so let’s focus on that!

培训循环看起来很像第2节和第3章中的循环,但在评估部分有一些不同-所以让我们重点关注这一点!

The first thing to note is that we use the generate() method to compute predictions, but this is a method on our base model, not the wrapped model 🤗 Accelerate created in the prepare() method. That’s why we unwrap the model first, then call this method.

首先要注意的是,我们使用generate()方法来计算预测,但这是我们的基础模型上的方法,而不是🤗 Accelerate在prepare()方法中创建的包装模型上的方法。这就是我们首先解包模型,然后调用此方法的原因。

The second thing is that, like with token classification, two processes may have padded the inputs and labels to different shapes, so we use accelerator.pad_across_processes() to make the predictions and labels the same shape before calling the gather() method. If we don’t do this, the evaluation will either error out or hang forever.

第二件事是,与token分类一样,两个进程可能已经将输入和标签填充到不同的形状,因此在调用gather()方法之前,我们使用accelerator.pad_across_processes()使预测和标签具有相同的形状。如果我们不这样做,评估要么出错,要么永远挂起。
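To see why the padding has to be aligned before gathering, the sketch below mimics the idea on plain Python lists, with one inner list of sequences per process. It is only an illustration with a hypothetical helper name; the real method works on tensors and communicates between processes.

```python
def pad_to_common_length(per_process_batches, pad_value):
    """Pad every sequence to the longest length found on any process."""
    max_len = max(
        len(seq) for batch in per_process_batches for seq in batch
    )
    return [
        [seq + [pad_value] * (max_len - len(seq)) for seq in batch]
        for batch in per_process_batches
    ]
```

Once every process holds sequences of the same length, the batches can be concatenated into one rectangular array, which is what gathering requires.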


Once this is done, you should have a model that has results pretty similar to the one trained with the Seq2SeqTrainer. You can check the one we trained using this code at huggingface-course/marian-finetuned-kde4-en-to-fr-accelerate. And if you want to test out any tweaks to the training loop, you can directly implement them by editing the code shown above!

完成后,你应该得到一个结果与用Seq2SeqTrainer训练的模型非常相似的模型。您可以在huggingface-course/marian-finetuned-kde4-en-to-fr-accelerate上查看我们使用此代码训练的模型。如果您想测试对训练循环的任何调整,可以通过编辑上面显示的代码直接实现!

Using the fine-tuned model

使用微调的模型

We’ve already shown you how you can use the model we fine-tuned on the Model Hub with the inference widget. To use it locally in a pipeline, we just have to specify the proper model identifier:

我们已经向您展示了如何将我们在Model Hub上微调的模型与推理小部件一起使用。要在Pipeline中本地使用它,我们只需指定正确的型号标识:


As expected, our pretrained model adapted its knowledge to the corpus we fine-tuned it on, and instead of leaving the English word “threads” alone, it now translates it to the French official version. It’s the same for “plugin”:

正如预期的那样,我们的预训练模型使其知识适应了我们微调所用的语料库,它现在不再原样保留英语单词“threads”,而是将其翻译成法语的正式说法。“plugin”也是一样:


Another great example of domain adaptation!

领域适应的另一个很好的例子!

✏️ Your turn! What does the model return on the sample with the word “email” you identified earlier?

✏️轮到你了!该模型在带有您前面标识的单词“Email”的示例上返回了什么?