L11-Unit_8-Part_1_Proximal_Policy_Optimization_(PPO)-C2-Introducing_the_Clipped_Surrogate_Objective_Function

中英文对照学习,效果更佳!

原课程链接:https://huggingface.co/deep-rl-course/unit2/bellman-equation?fw=pt

Introducing the Clipped Surrogate Objective Function

引入截断的代理目标函数

Recap: The Policy Objective Function

概述:政策目标职能

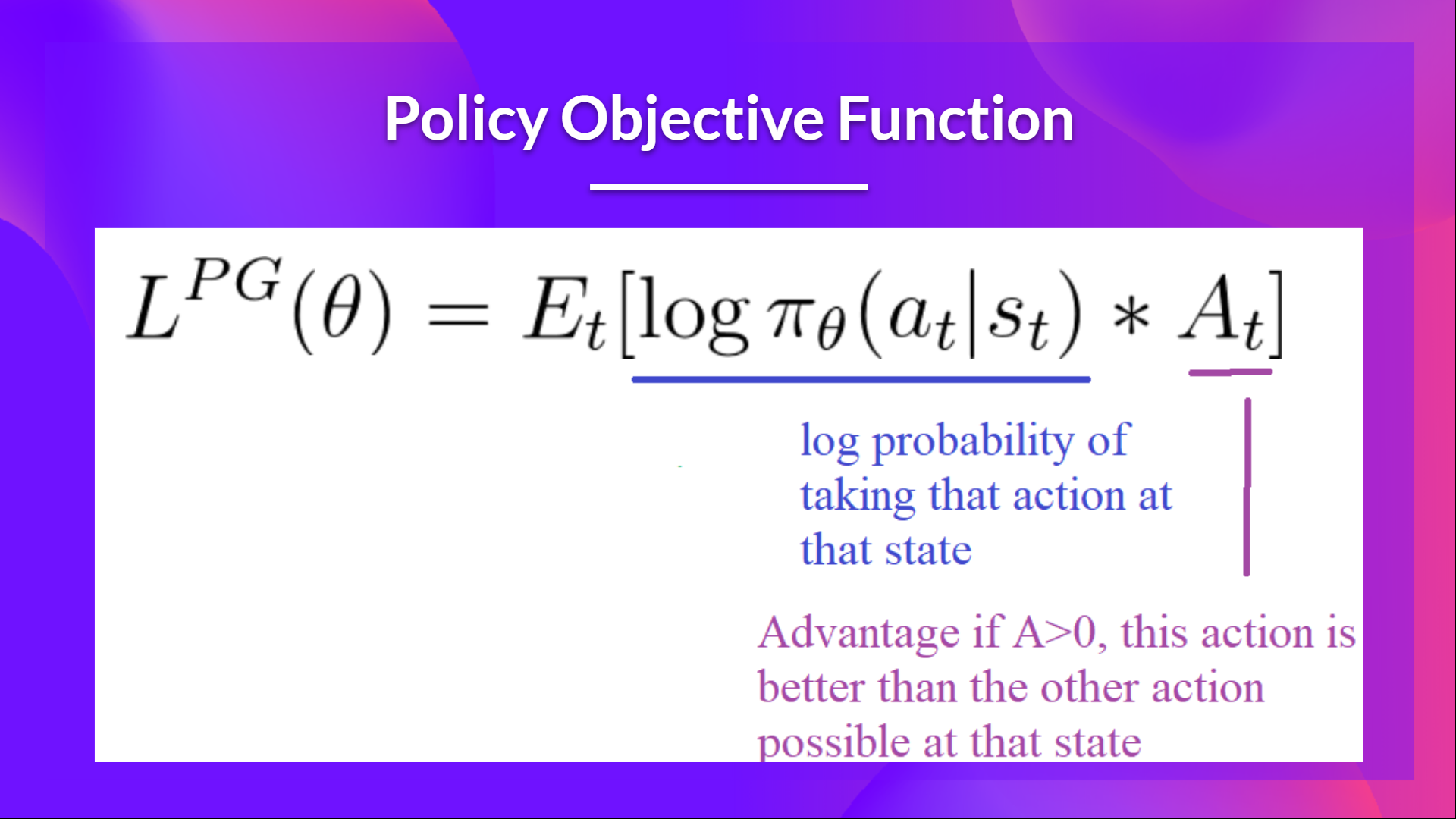

Let’s remember what is the objective to optimize in Reinforce:

让我们记住在增强中优化的目标是什么:

The idea was that by taking a gradient ascent step on this function (equivalent to taking gradient descent of the negative of this function), we would push our agent to take actions that lead to higher rewards and avoid harmful actions.

强化的想法是,通过对该函数采取梯度上升步骤(相当于采用该函数的负值的梯度下降),我们将推动我们的代理采取导致更高回报的行动,并避免有害行动。

However, the problem comes from the step size:

然而,问题出在步长上:

- Too small, the training process was too slow

- Too high, there was too much variability in the training

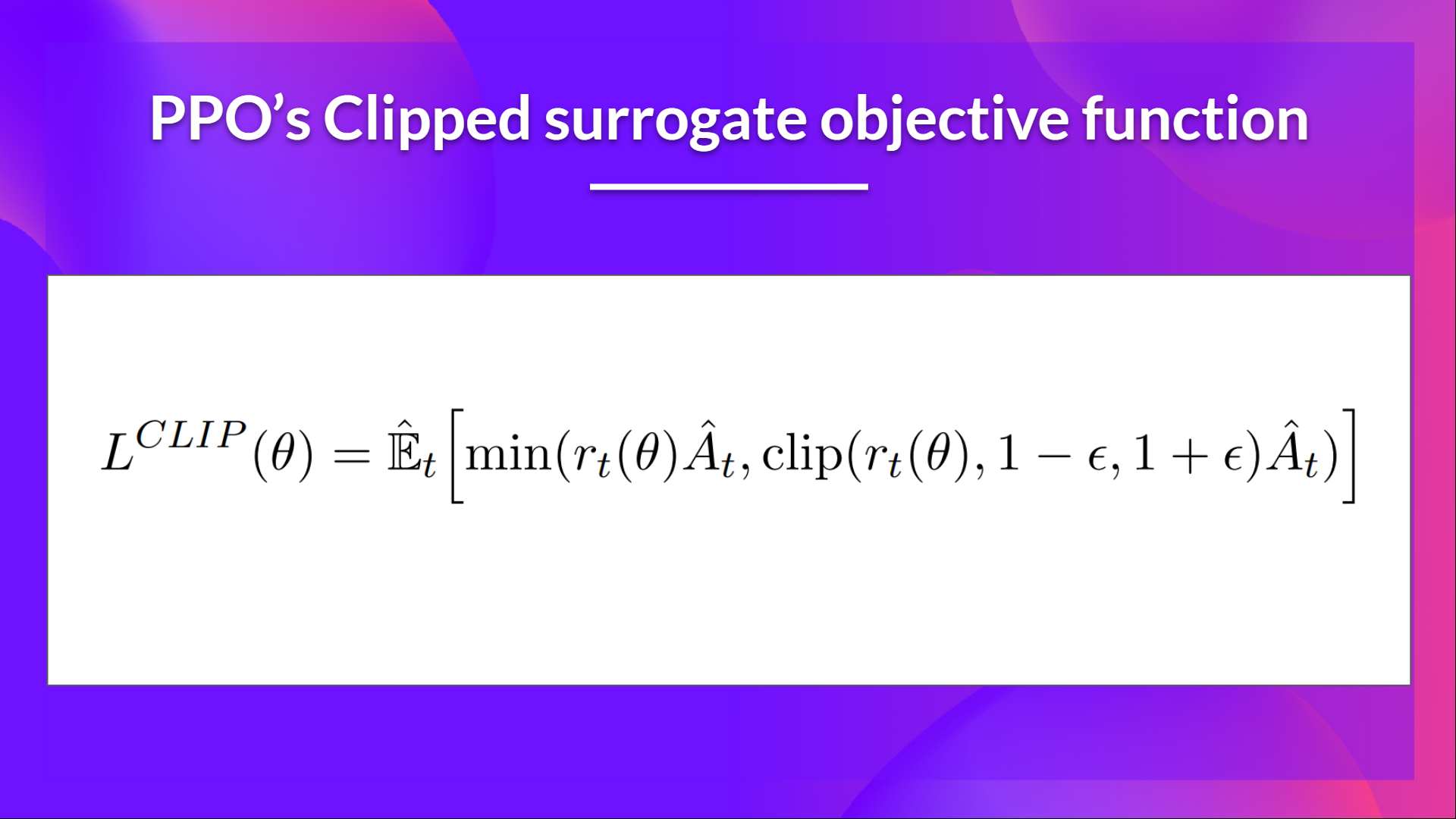

Here with PPO, the idea is to constrain our policy update with a new objective function called the Clipped surrogate objective function that will constrain the policy change in a small range using a clip.

太小,训练过程太慢太高,训练中有太多的可变性在PPO中,我们的想法是使用一个称为裁剪代理目标函数的新目标函数来约束我们的政策更新,该目标函数将使用裁剪在小范围内约束政策变化。

This new function is designed to avoid destructive large weights updates :

这一新功能旨在避免破坏性的大权重更新:

Let’s study each part to understand how it works.

PPO代理函数让我们研究每个部分,以了解它是如何工作的。

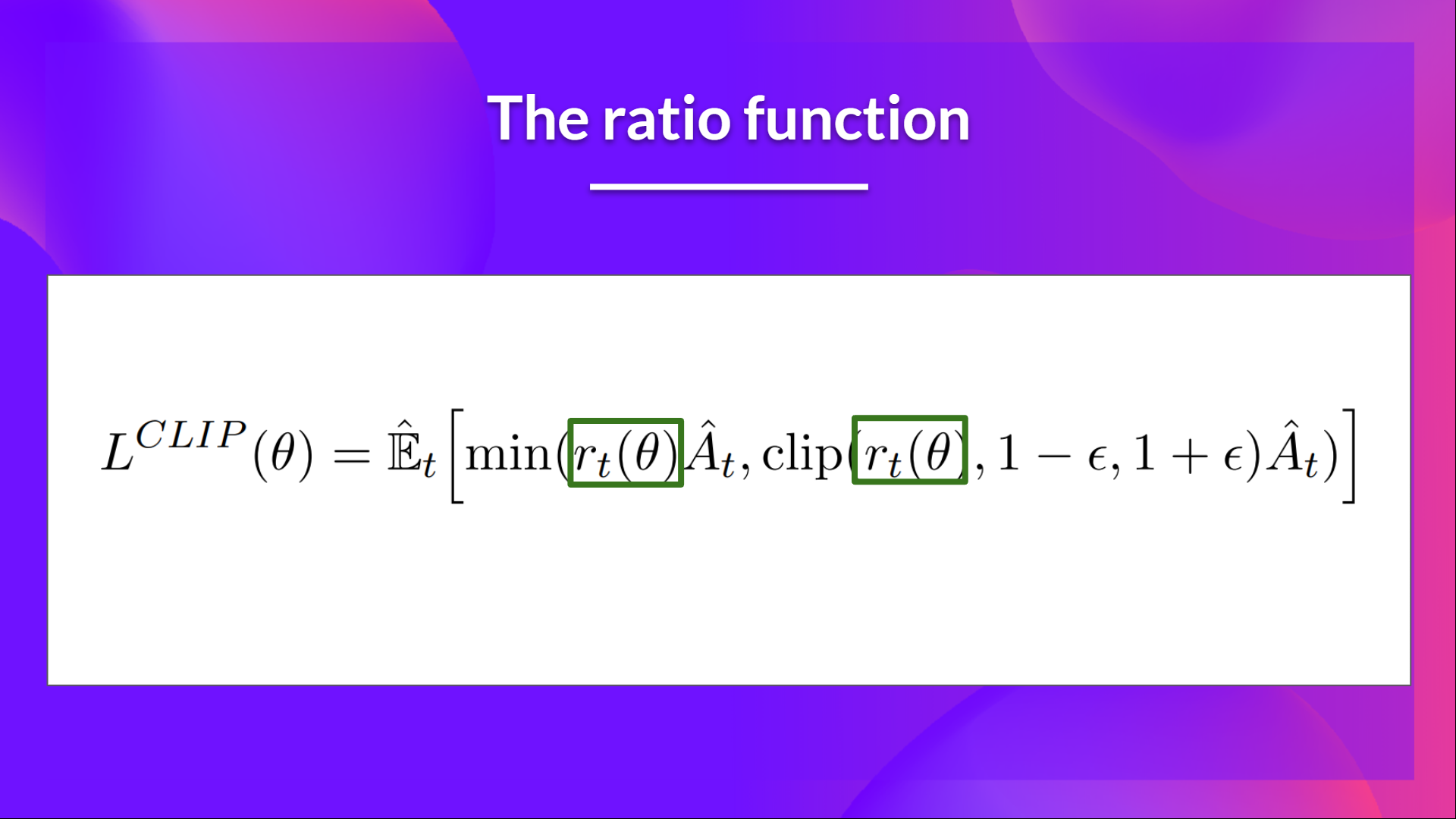

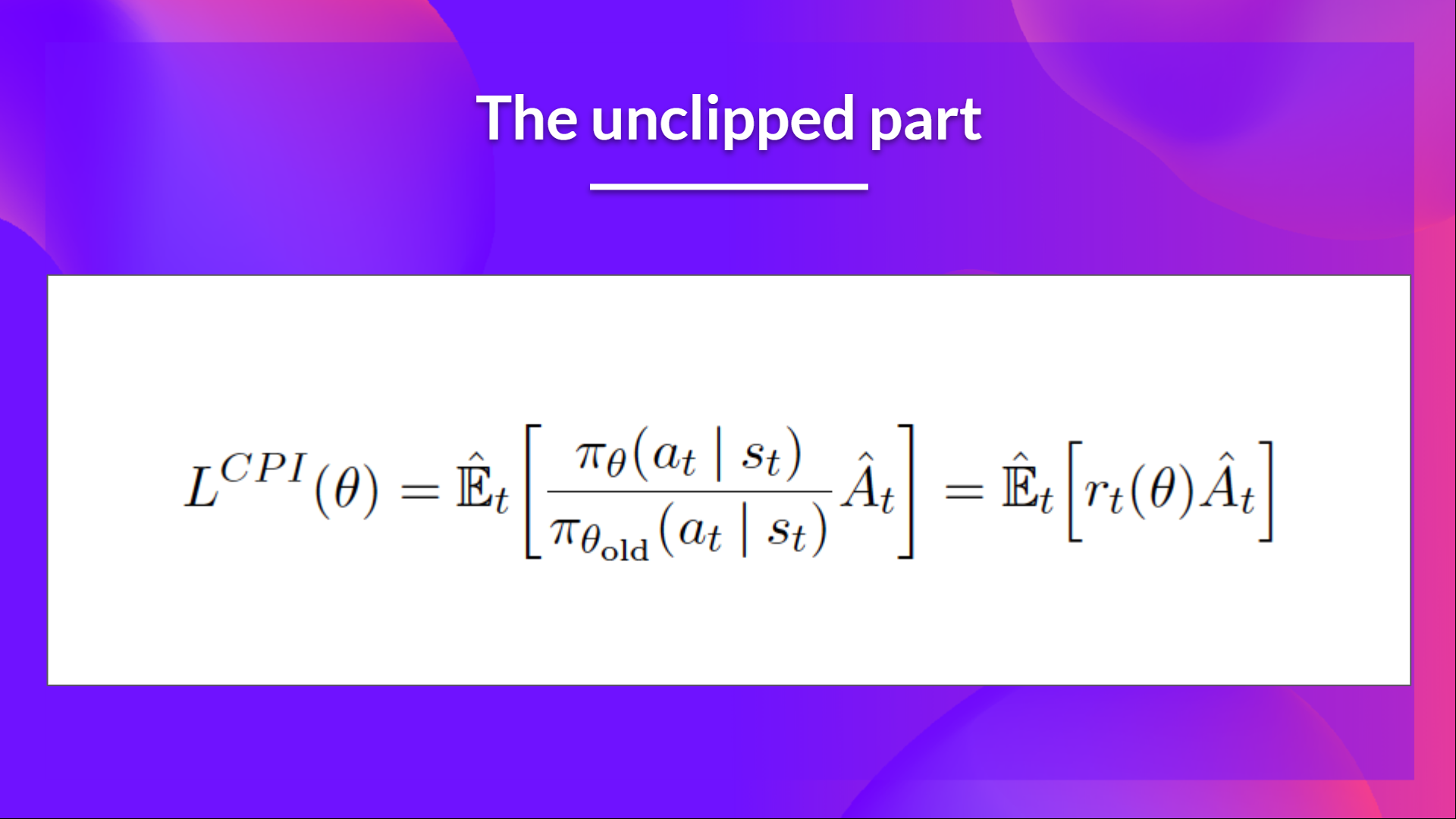

The Ratio Function

比率函数

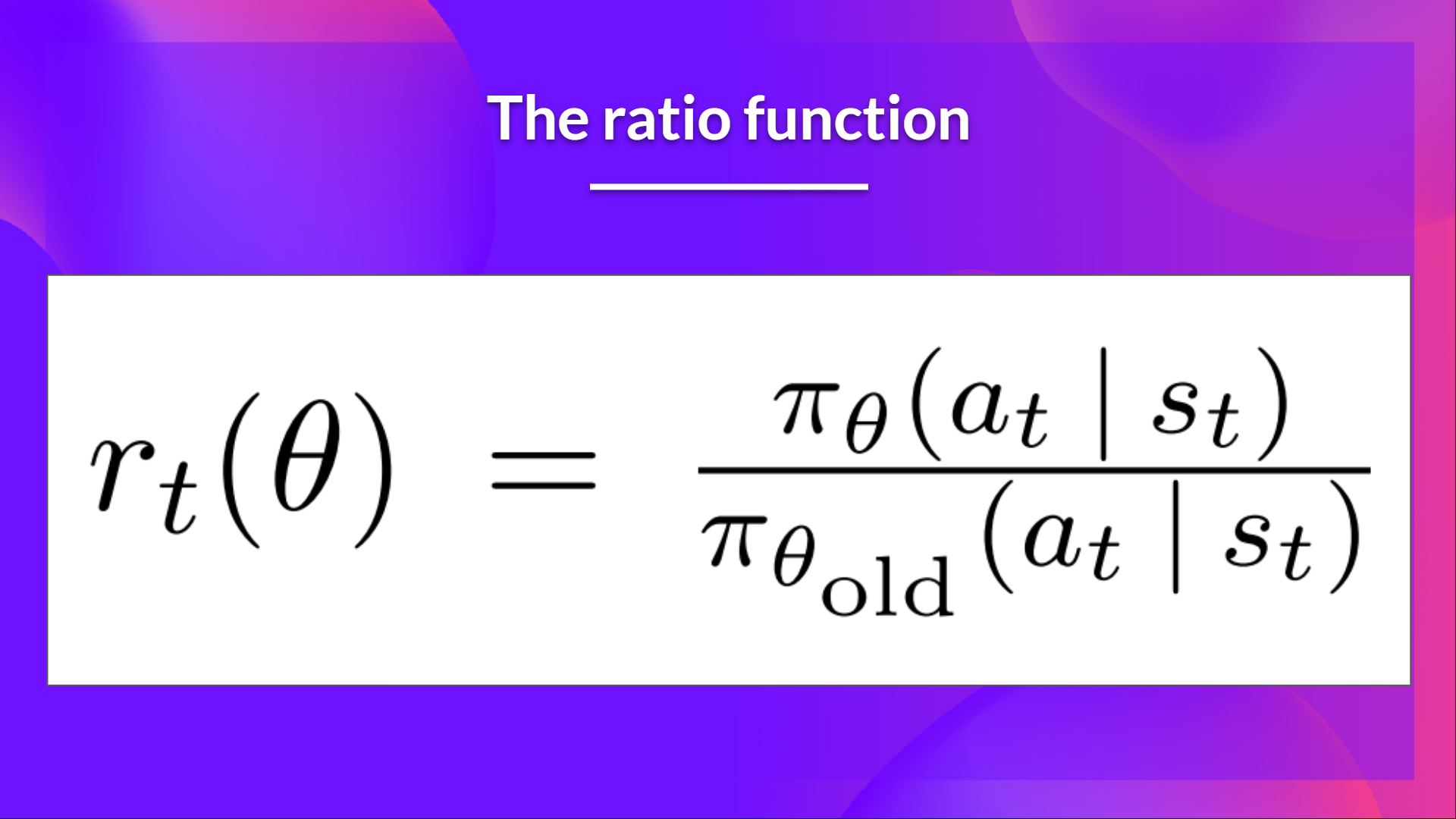

This ratio is calculated this way:

比率此比率按如下方式计算:

It’s the probability of taking action at a_t at at state st s_t st in the current policy divided by the previous one.

比率它是在当前策略中的状态st s_t st在状态a_t采取行动的概率除以前一个策略。

As we can see, rt(θ) r_t(\theta) rt(θ) denotes the probability ratio between the current and old policy:

如我们所见,RT(Theta)r_t(\)RTθ(θ)表示当前策略和旧策略之间的概率比:

- If rt(θ)>1 r_t(\theta) > 1 rt(θ)>1, the action at a_t at at state st s_t st is more likely in the current policy than the old policy.

- If rt(θ) r_t(\theta) rt(θ) is between 0 and 1, the action is less likely for the current policy than for the old one.

So this probability ratio is an easy way to estimate the divergence between old and current policy.

如果RT()>1 r_t(\theta)>1 RT()>1,则在状态st s_t stθ的a_t处的操作在当前策略中比旧策略中更有可能发生;如果RT(θ)r_t(\theta)RT(θ)介于0和1之间,则当前策略中该操作的可能性小于旧策略。因此,该概率比是一种简单地估计旧策略和当前策略之间差异的方法。

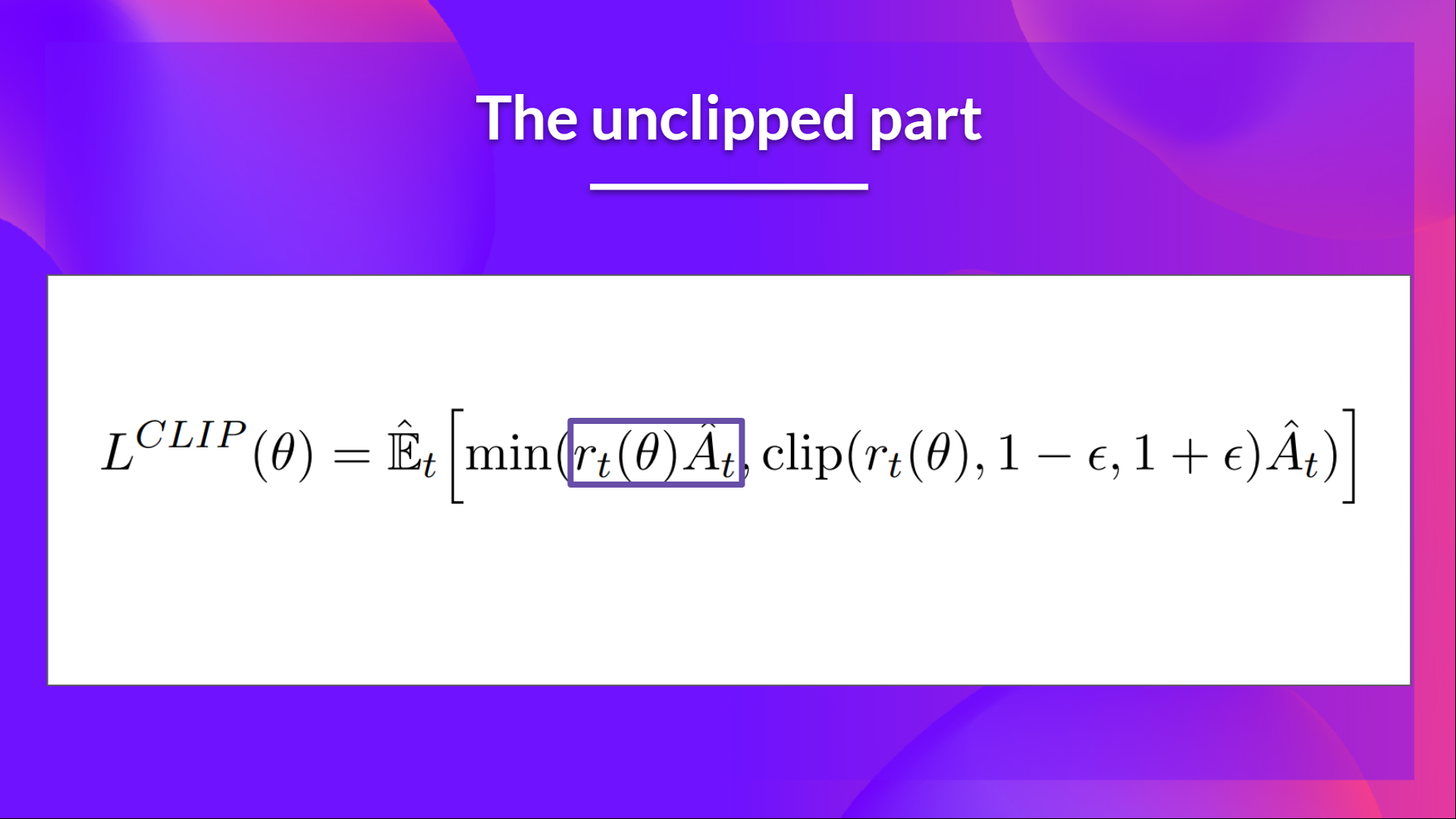

The unclipped part of the Clipped Surrogate Objective function

被剪裁的代理项目标函数的未剪裁部分

This ratio can replace the log probability we use in the policy objective function. This gives us the left part of the new objective function: multiplying the ratio by the advantage.

PPO这个比率可以取代我们在政策目标函数中使用的对数概率。这给了我们新目标函数的左侧部分:将比率乘以优势。

Proximal Policy Optimization Algorithms

However, without a constraint, if the action taken is much more probable in our current policy than in our former, this would lead to a significant policy gradient step and, therefore, an excessive policy update.

PPO近似式策略优化算法然而,如果没有限制,如果在当前策略中采取的操作比在以前策略中更有可能,这将导致显著的策略梯度步骤,因此策略更新过多。

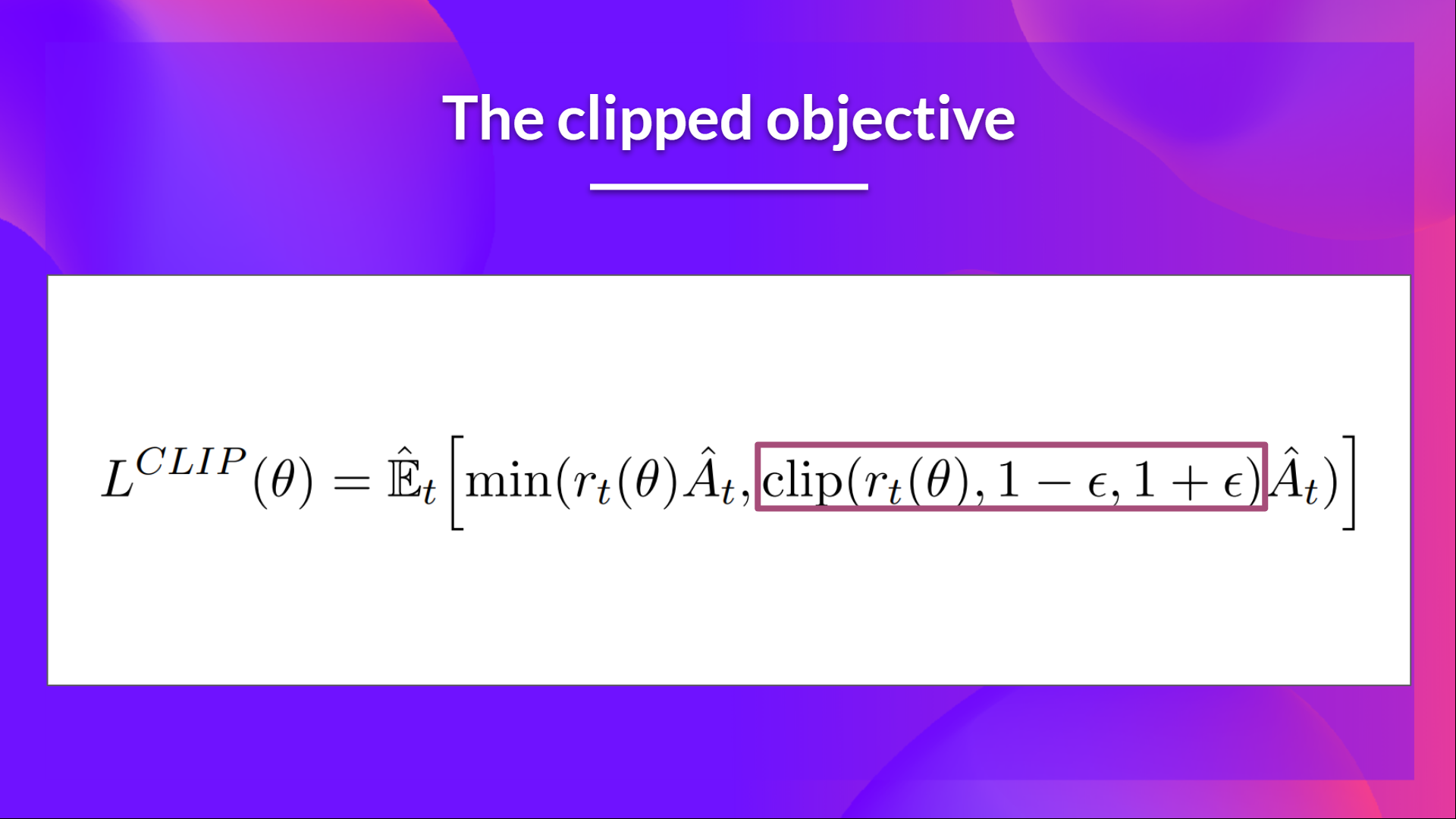

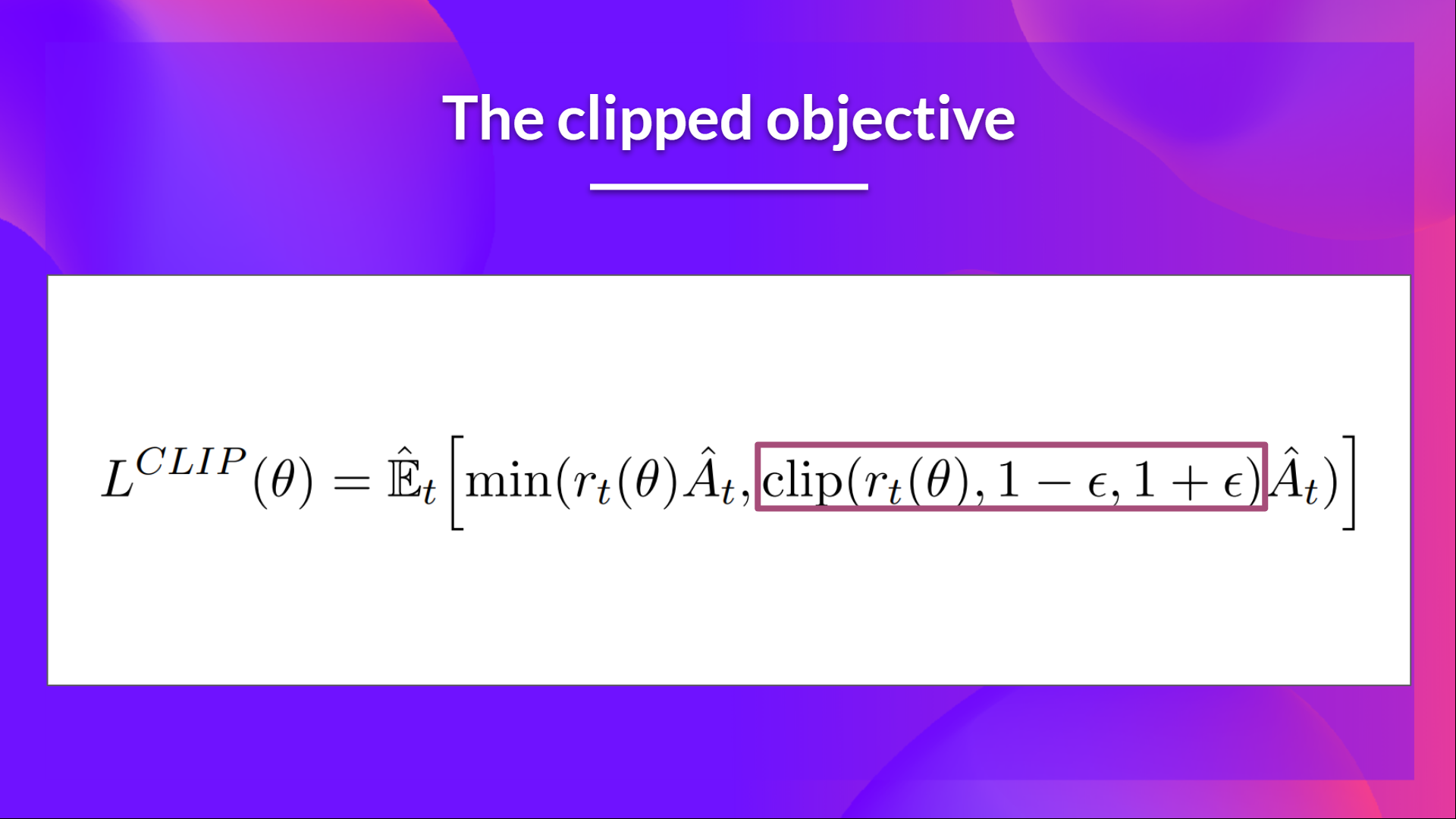

The clipped Part of the Clipped Surrogate Objective function

被剪裁的代理目标函数的剪裁部分

Consequently, we need to constrain this objective function by penalizing changes that lead to a ratio away from 1 (in the paper, the ratio can only vary from 0.8 to 1.2).

因此,我们需要通过惩罚导致比率偏离1的变化来约束这一目标函数(在本文中,比率只能从0.8到1.2变化)。

By clipping the ratio, we ensure that we do not have a too large policy update because the current policy can’t be too different from the older one.

通过削减比率,我们确保不会有太大的策略更新,因为当前策略不能与旧策略有太大差异。

To do that, we have two solutions:

要做到这一点,我们有两种解决方案:

- TRPO (Trust Region Policy Optimization) uses KL divergence constraints outside the objective function to constrain the policy update. But this method is complicated to implement and takes more computation time.

- PPO clip probability ratio directly in the objective function with its Clipped surrogate objective function.

This clipped part is a version where rt(theta) is clipped between [1−ϵ,1+ϵ] [1 - \epsilon, 1 + \epsilon] [1−ϵ,1+ϵ].

TRPO(信任域策略优化)使用目标函数外的KL发散度约束来约束策略更新。但这种方法实现复杂,计算时间较长。PPO直接在目标函数中裁剪概率比及其裁剪的代理目标函数。PPO这个裁剪部分是RT(Theta)在[1−ϵ,1+ϵ][1-\ϵ,1+\epsilon][1−ϵ,1+ϵ]之间裁剪的版本。

With the Clipped Surrogate Objective function, we have two probability ratios, one non-clipped and one clipped in a range (between [1−ϵ,1+ϵ] [1 - \epsilon, 1 + \epsilon] [1−ϵ,1+ϵ], epsilon is a hyperparameter that helps us to define this clip range (in the paper ϵ=0.2 \epsilon = 0.2 ϵ=0.2.).

有了裁剪的代理目标函数,我们有两个概率比,一个未裁剪的和一个裁剪的在一个范围内(介于[1−ϵ,1+ϵ][1-\ϵ,1+\epsilon][1−ϵ,1+ϵ],epsilon是一个超参数,它帮助我们定义这个裁剪范围(在论文中,ϵ=0.2\epsilon=0.2ϵ=0.2)。)

Then, we take the minimum of the clipped and non-clipped objective, so the final objective is a lower bound (pessimistic bound) of the unclipped objective.

然后,我们取已裁剪和未裁剪的目标的最小值,因此最终目标是未裁剪的目标的下界(悲观界)。

Taking the minimum of the clipped and non-clipped objective means we’ll select either the clipped or the non-clipped objective based on the ratio and advantage situation.

取剪裁目标和非剪裁目标的最小值意味着我们将根据比率和优势情况来选择剪裁目标或非剪裁目标。