K10-Unit_7-Introduction_to_Multi_Agents_and_AI_vs_AI-D3-Self_Play

中英文对照学习,效果更佳!

原课程链接:https://huggingface.co/deep-rl-course/unitbonus1/play?fw=pt

Self-Play: a classic technique to train competitive agents in adversarial games

自我发挥:在对抗性游戏中训练竞争性代理人的经典技巧

Now that we studied the basics of multi-agents. We’re ready to go deeper. As mentioned in the introduction, we’re going to train agents in an adversarial game with SoccerTwos, a 2vs2 game.

现在我们学习了多智能体的基础知识。我们已经准备好进行更深入的研究。正如介绍中提到的,我们将在与SoccerTwos的对抗性游戏中训练代理,这是一款2vs2的游戏。

This environment was made by the Unity MLAgents Team

SoccerTwos这个环境是由Unity MLAgents团队制作的

What is Self-Play?

什么是自我发挥?

Training agents correctly in an adversarial game can be quite complex.

在对抗性游戏中正确地训练代理人可能是相当复杂的。

On the one hand, we need to find how to get a well-trained opponent to play against your training agent. And on the other hand, even if you have a very good trained opponent, it’s not a good solution since how your agent is going to improve its policy when the opponent is too strong?

一方面,我们需要找到一个训练有素的对手来对抗你的训练代理人。另一方面,即使你有一个非常好的训练有素的对手,这也不是一个好的解决方案,因为当对手太强时,你的代理人将如何改进其策略?

Think of a child that just started to learn soccer. Playing against a very good soccer player will be useless since it will be too hard to win or at least get the ball from time to time. So the child will continuously lose without having time to learn a good policy.

想想一个刚开始学足球的孩子。与一个非常优秀的足球运动员比赛将是毫无用处的,因为赢球或至少时不时地拿到球太难了。所以孩子没有时间学习好的政策,就会连续输掉。

The best solution would be to have an opponent that is on the same level as the agent and will upgrade its level as the agent upgrades its own. Because if the opponent is too strong, we’ll learn nothing; if it is too weak, we’ll overlearn useless behavior against a stronger opponent then.

最好的解决方案是有一个与代理处于同一级别的对手,并将随着代理升级自己的级别而升级其级别。因为如果对手太强,我们什么都学不到;如果对手太弱,我们会过度学习对抗更强对手的无用行为。

This solution is called self-play. In self-play, the agent uses former copies of itself (of its policy) as an opponent. This way, the agent will play against an agent of the same level (challenging but not too much), have opportunities to gradually improve its policy, and then update its opponent as it becomes better. It’s a way to bootstrap an opponent and progressively increase the opponent’s complexity.

这种解决方案被称为自我发挥。在自我发挥中,代理将自己(其策略)的以前副本用作对手。这样,代理人将与相同级别的代理人比赛(具有挑战性,但不会太多),有机会逐步改进其政策,然后随着对手变得更好而更新。这是一种引导对手并逐渐增加对手复杂性的方法。

It’s the same way humans learn in competition:

这与人类在竞争中学习的方式相同:

- We start to train against an opponent of similar level

- Then we learn from it, and when we acquired some skills, we can move further with stronger opponents.

We do the same with self-play:

我们开始与水平相近的对手进行训练,然后我们从中学习,当我们掌握了一些技能后,我们可以与更强大的对手走得更远。我们对自我发挥也是如此:

- We start with a copy of our agent as an opponent this way, this opponent is on a similar level.

- We learn from it, and when we acquire some skills, we update our opponent with a more recent copy of our training policy.

The theory behind self-play is not something new. It was already used by Arthur Samuel’s checker player system in the fifties and by Gerald Tesauro’s TD-Gammon in 1955. If you want to learn more about the history of self-play check this very good blogpost by Andrew Cohen

我们以这种方式从我们的代理作为对手的副本开始,这个对手处于类似的水平。我们从中学习,当我们获得一些技能时,我们会用我们训练策略的最新副本来更新我们的对手。自我发挥背后的理论并不是什么新鲜事。它已经被阿瑟·塞缪尔的跳棋系统在50年代使用,并在1955年被杰拉尔德·特索罗的TD-Gammon使用。如果你想了解更多关于自我游戏的历史,请查看安德鲁·科恩的这篇非常好的博客帖子

Self-Play in MLAgents

MLAgents中的自我发挥

Self-Play is integrated into the MLAgents library and is managed by multiple hyperparameters that we’re going to study. But the main focus as explained in the documentation is the tradeoff between the skill level and generality of the final policy and the stability of learning.

Self-play被集成到MLAgents库中,并由我们将要研究的多个超参数管理。但正如文档中解释的那样,主要关注的是最终策略的技能水平和一般性与学习稳定性之间的权衡。

Training against a set of slowly changing or unchanging adversaries with low diversity results in more stable training. But a risk to overfit if the change is too slow.

针对一组变化缓慢或变化不大、多样性较低的对手进行训练,可以得到更稳定的训练。但如果变化太慢,就有过度适应的风险。

We need then to control:

然后我们需要控制:

- How often do we change opponents with

swap_stepsandteam_changeparameters. - The number of opponents saved with

windowparameter. A larger value ofwindow

means that an agent’s pool of opponents will contain a larger diversity of behaviors since it will contain policies from earlier in the training run. - Probability of playing against the current self vs opponent sampled in the pool with

play_against_latest_model_ratio. A larger value ofplay_against_latest_model_ratio

indicates that an agent will be playing against the current opponent more often. - The number of training steps before saving a new opponent with

save_stepsparameters. A larger value ofsave_steps

will yield a set of opponents that cover a wider range of skill levels and possibly play styles since the policy receives more training.

To get more details about these hyperparameters, you definitely need to check this part of the documentation

我们多久用swap_steps和Team_change参数更换对手一次。用window参数保存对手的数量。window值越大,意味着代理的对手池将包含更大的行为多样性,因为它将包含训练运行早期的策略。与当前自我与池中采样的对手进行比赛的可能性使用play_gainst_Latest_Model_Ratio。play_gainst_Latest_Model_Ratio的值越大,表明代理将更频繁地与当前对手比赛。使用save_steps参数保存新对手之前的训练步数。由于该策略接受了更多的培训,因此较大的`save_steps‘值将生成一组覆盖更广泛的技能水平和可能的比赛风格的对手。要获取有关这些超参数的更多详细信息,您肯定需要查看文档的这一部分

The ELO Score to evaluate our agent

评估我们代理的ELO分数

What is ELO Score?

ELO分数是多少?

In adversarial games, tracking the cumulative reward is not always a meaningful metric to track the learning progress: because this metric is dependent only on the skill of the opponent.

在对抗性游戏中,跟踪累积奖励并不总是跟踪学习进度的有意义的衡量标准:因为这个衡量标准只取决于对手的技能。

Instead, we’re using an ELO rating system (named after Arpad Elo) that calculates the relative skill level between 2 players from a given population in a zero-sum game.

取而代之的是,我们使用一个ELO评级系统(以Arpad Elo命名),该系统计算零和游戏中给定人群中两名玩家之间的相对技能水平。

In a zero-sum game: one agent wins, and the other agent loses. It’s a mathematical representation of a situation in which each participant’s gain or loss of utility is exactly balanced by the gain or loss of the utility of the other participants. We talk about zero-sum games because the sum of utility is equal to zero.

在零和博弈中:一个代理赢,另一个代理输。它是对一种情况的数学表示,在这种情况下,每个参与者的效用的收益或损失与其他参与者的效用的收益或损失完全平衡。我们之所以谈论零和博弈,是因为效用和等于零。

This ELO (starting at a specific score: frequently 1200) can decrease initially but should increase progressively during the training.

这个ELO(从一个特定的分数开始:经常是1200分)最初可以降低,但在训练过程中应该逐渐增加。

The Elo system is inferred from the losses and draws against other players. It means that player ratings depend on the ratings of their opponents and the results scored against them.

ELO系统是从损失中推断出来的,并与其他玩家打平。这意味着球员的评级取决于他们对手的评级和对他们的得分结果。

Elo defines an Elo score that is the relative skills of a player in a zero-sum game. We say relative because it depends on the performance of opponents.

ELO定义了ELO分数,它是零和游戏中玩家的相对技能。我们说相对,是因为这取决于对手的表现。

The central idea is to think of the performance of a player as a random variable that is normally distributed.

其核心思想是将球员的表现视为正态分布的随机变量。

The difference in rating between 2 players serves as the predictor of the outcomes of a match. If the player wins, but the probability of winning is high, it will only win a few points from its opponent since it means that it is much stronger than it.

两个球员之间的评分差异可以预测一场比赛的结果。如果玩家赢了,但获胜的概率很高,它只会从对手那里赢得几分,因为这意味着它比自己强得多。

After every game:

每场比赛结束后:

- The winning player takes points from the losing one.

- The number of points is determined by the difference in the 2 players ratings (hence relative).

- If the higher-rated player wins → few points will be taken from the lower-rated player.

- If the lower-rated player wins → a lot of points will be taken from the high-rated player.

- If it’s a draw → the lower-rated player gains a few points from the higher.

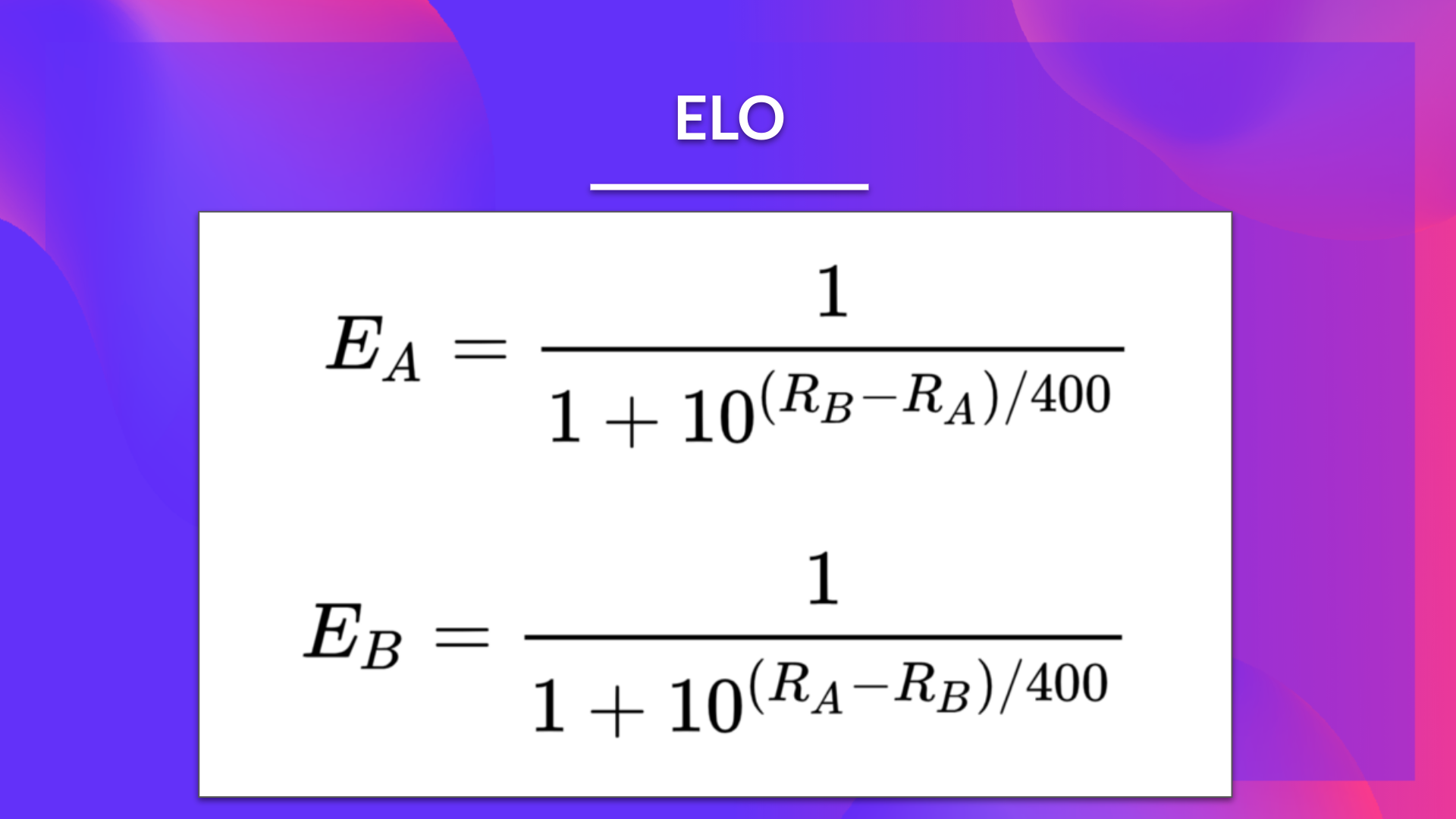

So if A and B have rating Ra, and Rb, then the expected scores are given by:

获胜的玩家从失败者那里得到分数。分数由两个玩家评分的差异决定(因此是相对的)。如果得分较高的玩家赢得→,得分将从得分较低的玩家那里得到很少。如果得分较低的玩家赢得→,得分将从得分较高的玩家那里获得很多分数。如果是平局,则得分较低的玩家从得分较高的玩家那里获得几分。因此,如果A和B的得分分别为Ra和Rb,则预期分数由以下公式给出:→

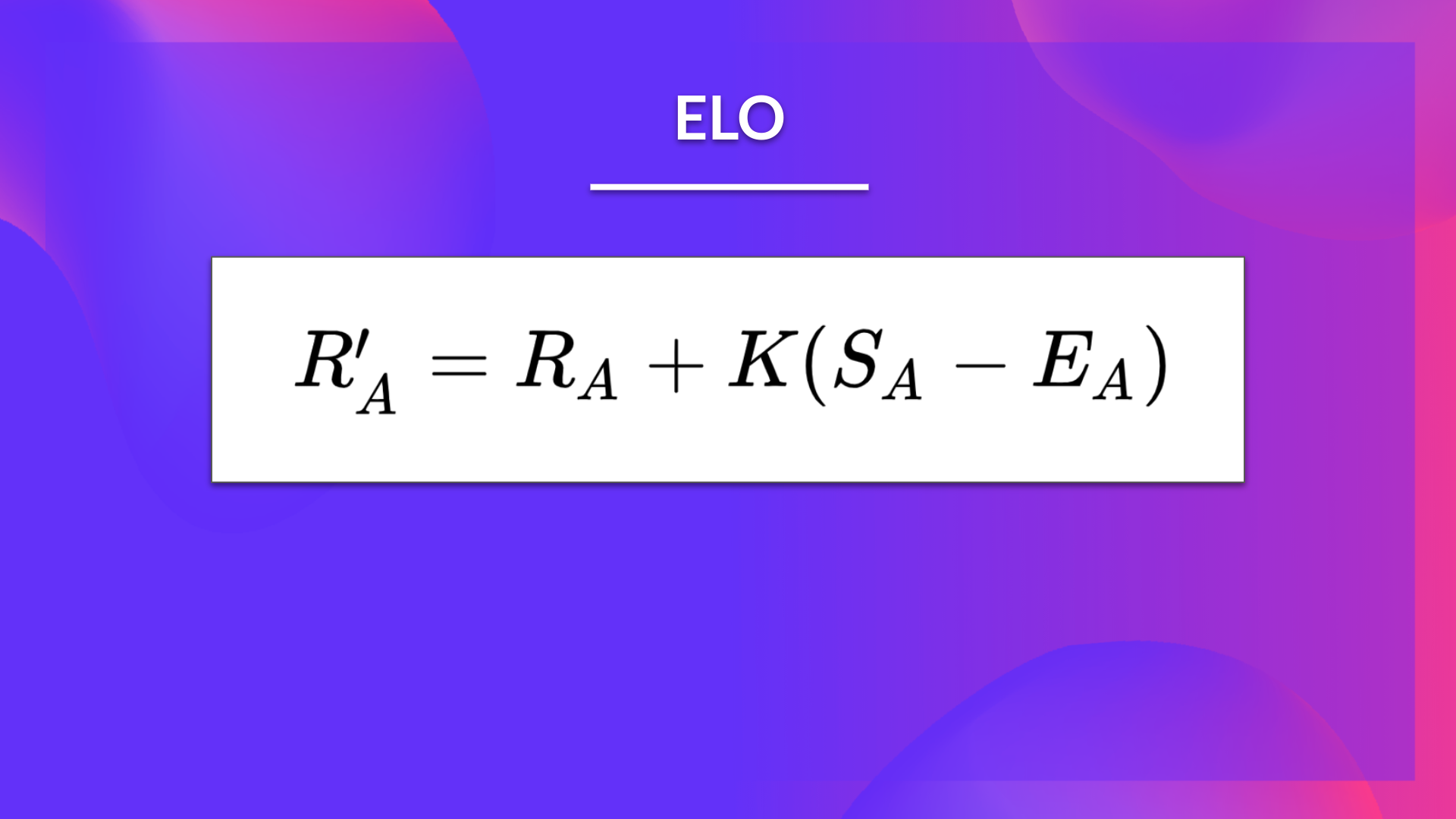

Then, at the end of the game, we need to update the player’s actual Elo score. We use a linear adjustment proportional to the amount by which the player over-performed or under-performed.

ELO得分然后,在游戏结束时,我们需要更新玩家的实际ELO得分。我们使用线性调整,与球员表现过高或表现不佳的程度成正比。

We also define a maximum adjustment rating per game: K-factor.

我们还定义了每场比赛的最大调整评级:K因子。

- K=16 for master.

- K=32 for weaker players.

If Player A has Ea points but scored Sa points, then the player’s rating is updated using the formula:

对于大师级球员,K=16。对于较弱的球员,K=32。如果球员A有EA分,但得到Sa分,则使用以下公式更新球员的评级:

ELO分数

Example

示例

If we take an example:

如果我们举个例子:

Player A has a rating of 2600

A玩家的评分为2600分

Player B has a rating of 2300

玩家B的评分为2300分

- We first calculate the expected score:

EA=11+10(2300−2600)/400=0.849E_{A} = \frac{1}{1+10^{(2300-2600)/400}} = 0.849 EA=1+10(2300−2600)/4001=0.849

我们首先计算预期分数:EA=11+10(2300−2600)/400=0.849E_{A}=\FRAC{1}{1+10^{(2300-2600)/400}}=0.849 EA=1+10(2300−2600)/4001=0.849

EB=11+10(2600−2300)/400=0.151E_{B} = \frac{1}{1+10^{(2600-2300)/400}} = 0.151 EB=1+10(2600−2300)/4001=0.151

EB=11+10(2600−2300)/400=0.151E_{B}=\FRAC{1}{1+10^{(2600-2300)/400}}=0.151 EB=1+10(2600−2300)/4001=0.151

- If the organizers determined that K=16 and A wins, the new rating would be:

ELOA=2600+16∗(1−0.849)=2602ELO_A = 2600 + 16*(1-0.849) = 2602 ELOA=2600+16∗(1−0.849)=2602

如果组织者确定K=16且A获胜,则新的评级将为:ELOA=2600+16∗(1−0.849)=2602ELO_A=2600+16*(1-0.849)=2602 ELOA=2600+16∗(1−0.849)=2602

ELOB=2300+16∗(0−0.151)=2298ELO_B = 2300 + 16*(0-0.151) = 2298 ELOB=2300+16∗(0−0.151)=2298

ELOB=2300+16∗(0−0.151)=2298ELO_B=2300+16*(0-0.151)=2298 ELOB=2300+16∗(0−0.151)=2298

- If the organizers determined that K=16 and B wins, the new rating would be:

ELOA=2600+16∗(0−0.849)=2586ELO_A = 2600 + 16*(0-0.849) = 2586 ELOA=2600+16∗(0−0.849)=2586

如果主办方确定K=16且B获胜,则新的评分将为:ELOA=2600+16∗(0−0.849)=2586ELO_A=2600+16*(0-0.849)=2586ELOA=2600+16∗(0−0.849)=2586

ELOB=2300+16∗(1−0.151)=2314ELO_B = 2300 + 16 *(1-0.151) = 2314 ELOB=2300+16∗(1−0.151)=2314

ELOB=2300+16∗(1−0.151)=2314ELO_B=2300+16*(1-0.151)=2314ELOB=2300+16∗(1−0.151)=2314

The Advantages

优势

Using ELO score has multiple advantages:

使用ELO评分有多个优点:

- Points are always balanced (more points are exchanged when there is an unexpected outcome, but the sum is always the same).

- It is a self-corrected system since if a player wins against a weak player, you will only win a few points.

- If works with team games: we calculate the average for each team and use it in Elo.

The Disadvantages

积分始终是平衡的(当出现意想不到的结果时,会交换更多的积分,但总和总是相同的)。这是一种自我纠正的系统,因为如果一名球员战胜了一名实力较弱的球员,你只会赢得几分。如果适用于团队比赛:我们计算每支球队的平均分数,并将其用于Elo.缺点

- ELO does not take the individual contribution of each people in the team.

- Rating deflation: good rating require skill over time to get the same rating.

- Can’t compare rating in history.