I8-Unit_5-Introduction_to_Unity_ML_Agents-D3-The_Pyramids_environment


Original course link: https://huggingface.co/deep-rl-course/unitbonus3/model-based?fw=pt

The Pyramid environment


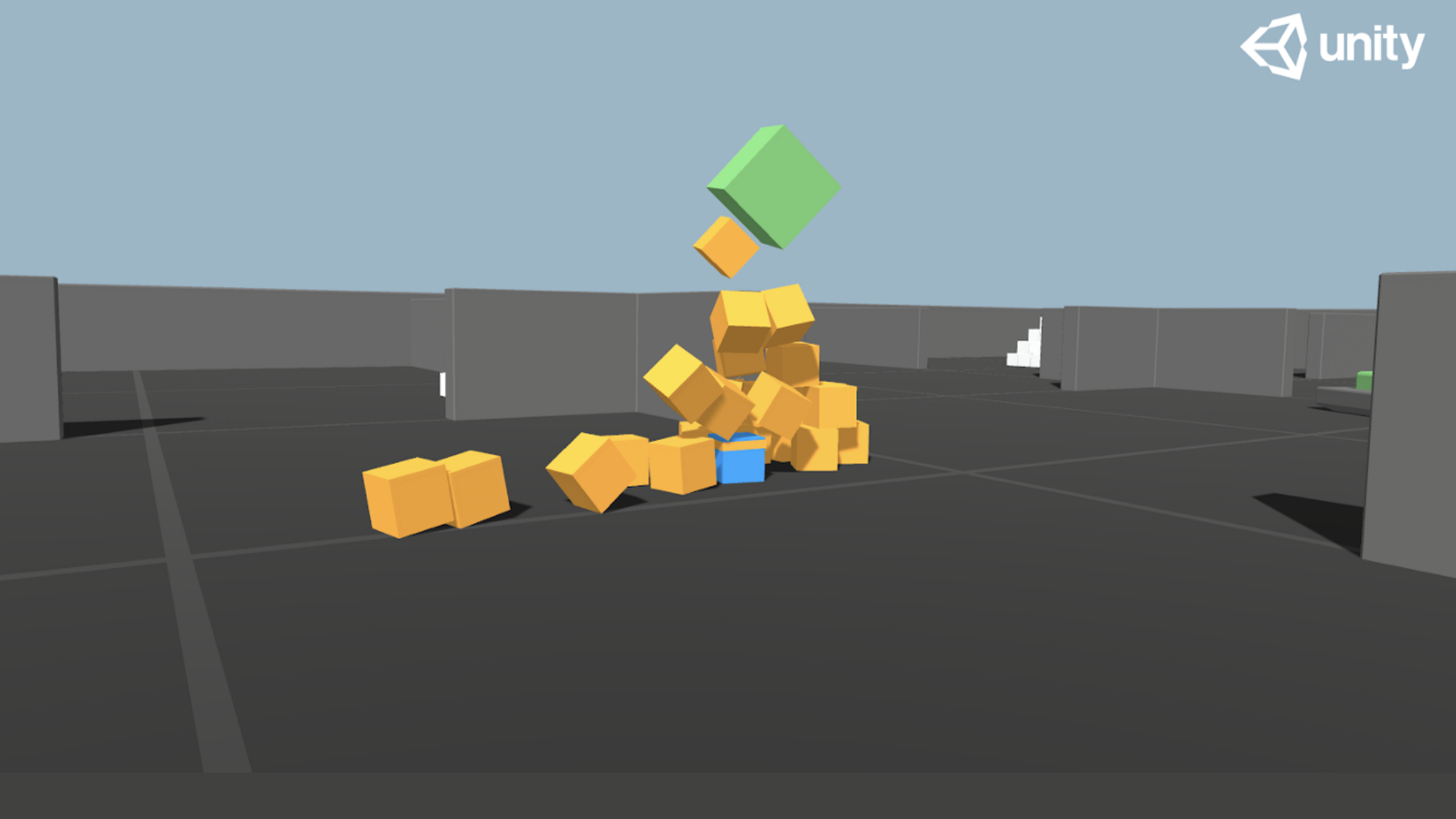

The goal in this environment is to train our agent to reach the gold brick at the top of the Pyramid. To do that, it needs to press a button to spawn a Pyramid, navigate to the Pyramid, knock it over, and move to the gold brick at the top.


The reward function


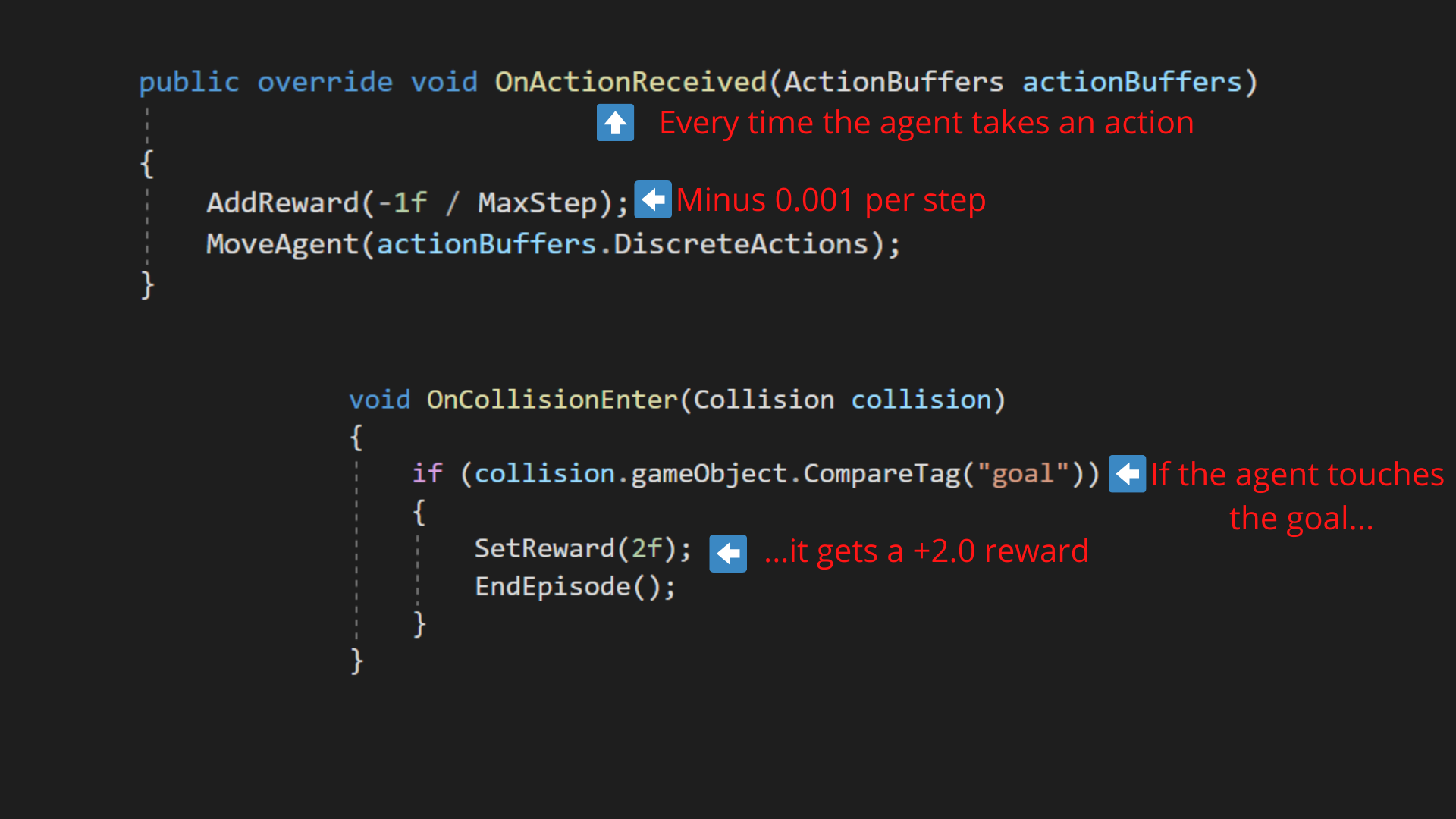

The reward function is:


In terms of code, it looks like this:

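The Python below is a rough stand-in for that code. It is a minimal sketch of a reward with this shape and is not the environment's actual C# implementation. It follows the ML-Agents example-environment documentation in assuming a reward of +2 for reaching the golden brick and a small penalty of 0.001 on every step. The constant values and the helper names `step_reward` and `episode_return` are illustrative assumptions.

```python
GOLD_BRICK_REWARD = 2.0   # assumed bonus for reaching the golden brick
STEP_PENALTY = 0.001      # assumed small penalty applied on every step


def step_reward(reached_gold_brick: bool) -> float:
    """Reward for a single environment step (simplified sketch)."""
    reward = -STEP_PENALTY           # time pressure: every step costs a little
    if reached_gold_brick:
        reward += GOLD_BRICK_REWARD  # sparse success bonus
    return reward


def episode_return(num_steps: int, succeeded: bool) -> float:
    """Undiscounted return of an episode lasting num_steps steps.

    If succeeded is True, the golden brick is assumed to be reached
    on the last step of the episode.
    """
    total = 0.0
    for t in range(num_steps):
        reached = succeeded and t == num_steps - 1
        total += step_reward(reached)
    return total


if __name__ == "__main__":
    print(round(episode_return(500, succeeded=True), 3))    # 1.5
    print(round(episode_return(1000, succeeded=False), 3))  # -1.0
```

The +2 is only earned after a long chain of steps (button, Pyramid, golden brick). This means a randomly acting agent rarely sees it, which is one reason an extra exploration signal helps.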

To train this new agent, which has to seek out the button and then the Pyramid to knock over, we’ll use a combination of two types of rewards:


- The extrinsic one, given by the environment (illustrated above).

- An intrinsic one called curiosity. This second reward pushes our agent to be curious, in other words, to explore its environment better.

If you want to know more about curiosity, the next section (optional) will explain the basics.

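Conceptually, the two rewards are combined with per-signal weights. In the ML-Agents trainer configuration, reward signals such as `extrinsic` and `curiosity` each take a `strength` that scales them. The Python below sketches only that weighting. It assumes illustrative strengths of 1.0 and 0.02 and treats the curiosity bonus as a given number rather than computing it. The real trainer's bookkeeping is more involved, since it estimates values per signal, so read this as an illustration and not as ML-Agents' implementation.

```python
from typing import Dict

# Illustrative weights. The keys mirror ML-Agents reward-signal names,
# but the values here are assumptions for the example.
STRENGTHS: Dict[str, float] = {"extrinsic": 1.0, "curiosity": 0.02}


def combined_reward(signals: Dict[str, float],
                    strengths: Dict[str, float] = STRENGTHS) -> float:
    """Weighted sum of the per-step reward signals."""
    return sum(strengths[name] * value for name, value in signals.items())


if __name__ == "__main__":
    # A step far from the golden brick: the extrinsic part is just the step
    # penalty, while the curiosity part rewards reaching a novel state.
    print(combined_reward({"extrinsic": -0.001, "curiosity": 0.5}))
```

Keeping the curiosity strength small relative to the extrinsic one lets the bonus nudge exploration without outweighing the actual goal.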

The observation space


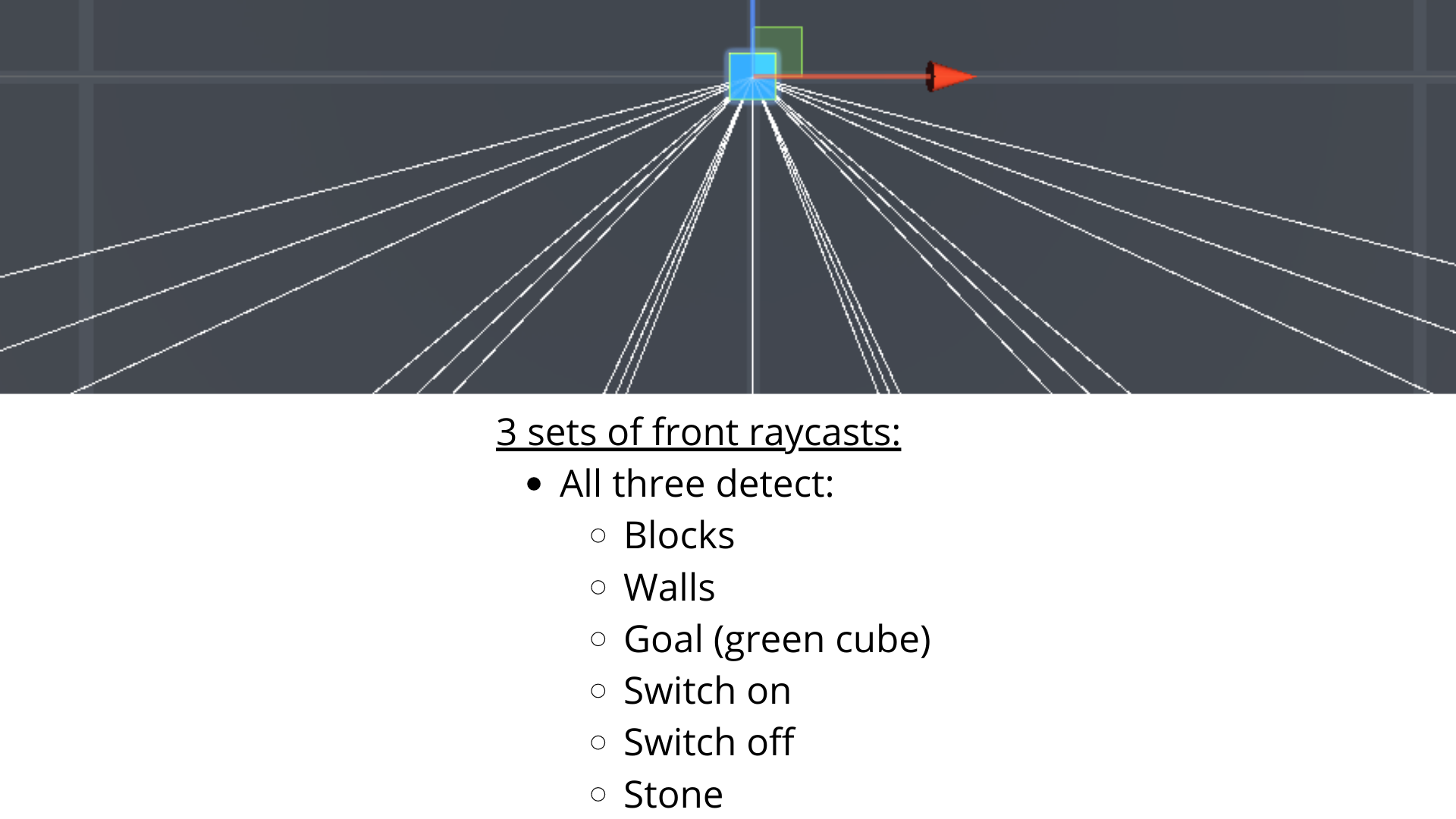

In terms of observation, we use 148 raycasts, each of which can detect objects (the switch, bricks, the golden brick, and walls).


We also use a boolean variable indicating the switch state (whether the switch has been turned on or off to spawn the Pyramid) and a vector that contains the agent’s speed.

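Here is a minimal Python sketch of how these pieces could be concatenated into one flat observation vector. The per-ray encoding is left abstract, so `ray_features` is just a list of floats. The speed is assumed to be a 3D velocity. The function name `build_observation` and both of these choices are assumptions for illustration, not the environment's actual code.

```python
from typing import List, Sequence


def build_observation(ray_features: Sequence[float],
                      switch_on: bool,
                      velocity: Sequence[float]) -> List[float]:
    """Concatenate ray-cast features, switch state, and agent velocity."""
    if len(velocity) != 3:
        raise ValueError("velocity is assumed to be a 3D vector")
    obs: List[float] = [float(r) for r in ray_features]  # what the rays detected
    obs.append(1.0 if switch_on else 0.0)                # boolean switch state as a float
    obs.extend(float(v) for v in velocity)               # agent's speed (assumed x, y, z)
    return obs


if __name__ == "__main__":
    # One placeholder value per ray cast; the real per-ray encoding is not modelled.
    rays = [0.0] * 148
    obs = build_observation(rays, switch_on=True, velocity=(0.5, 0.0, -1.2))
    print(len(obs))  # 152
```

Encoding the switch as 1.0 or 0.0 lets the policy condition on whether the Pyramid has already been spawned.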

The action space


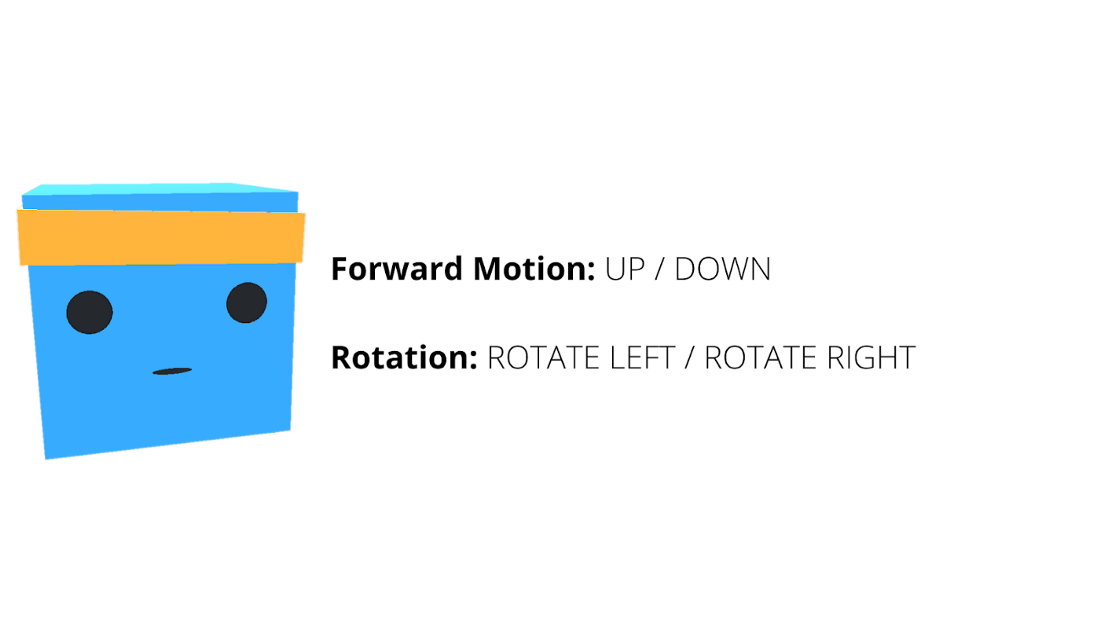

The action space is discrete with four possible actions:

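The ML-Agents example-environment documentation describes the four actions as turning clockwise, turning counterclockwise, moving forward, and moving backward. The Python below is a toy sketch that maps a discrete action index to one of those moves on a simple 2D pose. The index order, the step size, the turn angle, and the `Pose` and `apply_action` names are all hypothetical choices for illustration, not the environment's actual values.

```python
import math
from dataclasses import dataclass
from typing import Dict


@dataclass
class Pose:
    x: float
    z: float
    heading: float  # radians; 0 means facing +z


# Hypothetical index order for the four discrete actions.
ACTIONS: Dict[int, str] = {
    0: "forward",
    1: "backward",
    2: "turn_clockwise",
    3: "turn_counterclockwise",
}

STEP = 0.1                  # assumed distance per move action
TURN = math.radians(15.0)   # assumed rotation per turn action


def apply_action(pose: Pose, action: int) -> Pose:
    """Return the new pose after one discrete action (toy kinematics)."""
    name = ACTIONS[action]  # raises KeyError for indices outside 0..3
    if name in ("forward", "backward"):
        sign = 1.0 if name == "forward" else -1.0
        return Pose(pose.x + sign * STEP * math.sin(pose.heading),
                    pose.z + sign * STEP * math.cos(pose.heading),
                    pose.heading)
    # Turning changes only the heading; clockwise increases it in this sketch.
    delta = TURN if name == "turn_clockwise" else -TURN
    return Pose(pose.x, pose.z, pose.heading + delta)


if __name__ == "__main__":
    pose = apply_action(Pose(0.0, 0.0, 0.0), 0)  # move forward along +z
    print(pose)  # Pose(x=0.0, z=0.1, heading=0.0)
```

A single discrete branch with four options keeps the policy's output small: at each step it picks exactly one move or turn.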