H7-Unit_4-Policy_Gradient_with_PyTorch-G6-Quiz

中英文对照学习,效果更佳!

原课程链接:https://huggingface.co/deep-rl-course/unit8/conclusion?fw=pt

Quiz

小测验

The best way to learn and to avoid the illusion of competence is to test yourself. This will help you to find where you need to reinforce your knowledge.

学习和避免能力幻觉的最好方法是测试自己。这将帮助你找到你需要加强知识的地方。

Q1: What are the advantages of policy-gradient over value-based methods? (Check all that apply)

问1:与基于价值的方法相比,政策梯度方法有哪些优势?(请勾选所有适用项)

Policy-gradient methods can learn a stochastic policy

策略梯度法可以学习随机策略

Policy-gradient methods are more effective in high-dimensional action spaces and continuous actions spaces

策略梯度方法在高维动作空间和连续动作空间中更有效

Policy-gradient converges most of the time on a global maximum.

策略梯度在大多数情况下收敛于全局最大值。

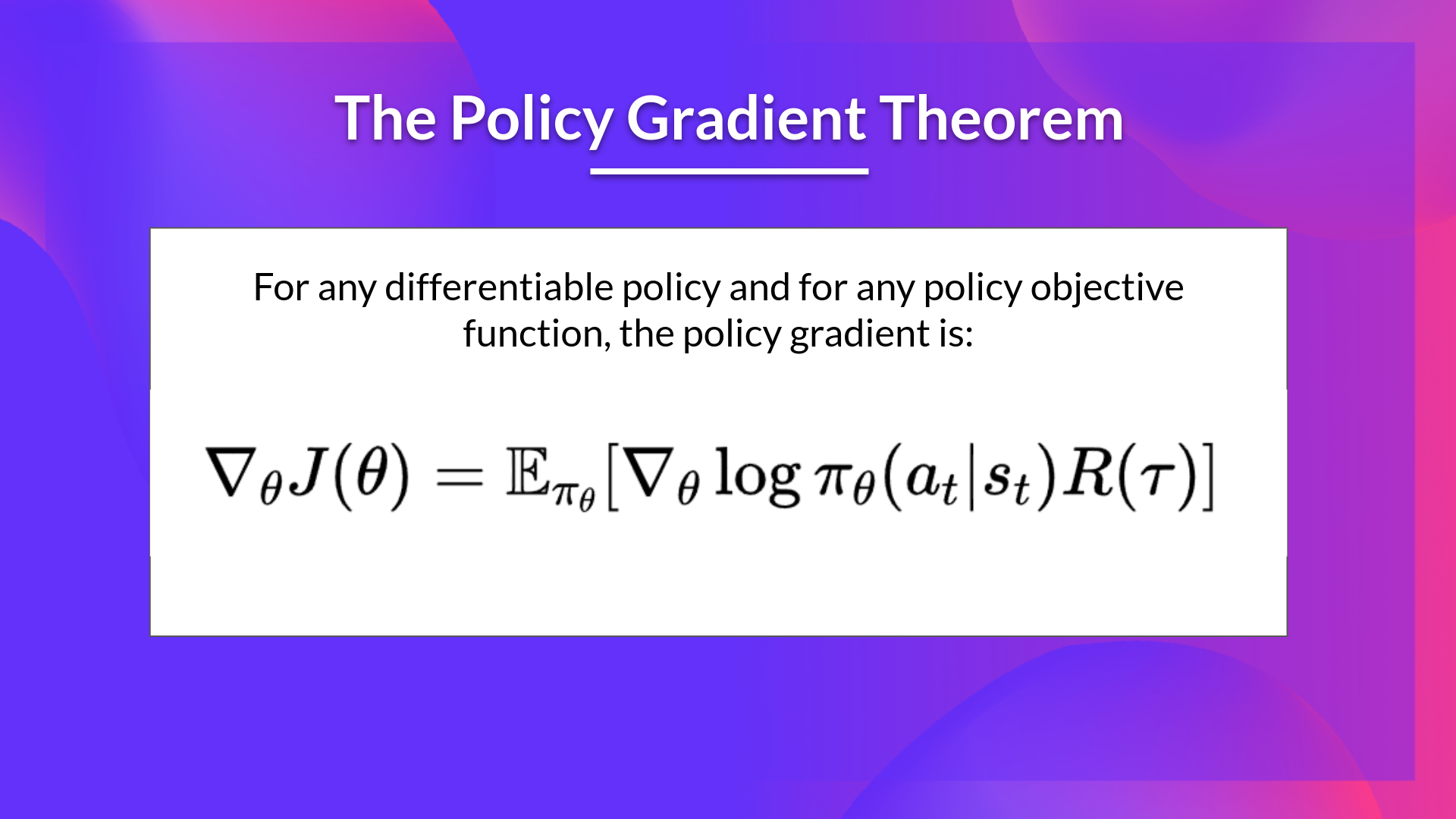

Q2: What is the Policy Gradient Theorem?

问2:什么是政策梯度定理?

Solution

The Policy Gradient Theorem is a formula that will help us to reformulate the objective function into a differentiable function that does not involve the differentiation of the state distribution.

解决方案政策梯度定理是一个公式,它将帮助我们将目标函数重新表示为一个可微函数,该函数不涉及状态分布的微分。

政策梯度

Q3: What’s the difference between policy-based methods and policy-gradient methods? (Check all that apply)

问3:基于政策的方法和基于政策的方法有什么不同?(请勾选所有适用项)

Policy-based methods are a subset of policy-gradient methods.

基于策略的方法是策略梯度方法的子集。

Policy-gradient methods are a subset of policy-based methods.

策略梯度方法是基于策略的方法的子集。

In Policy-based methods, we can optimize the parameter θ indirectly by maximizing the local approximation of the objective function with techniques like hill climbing, simulated annealing, or evolution strategies.

在基于策略的方法中,我们可以通过爬山、模拟退火法或进化策略最大化目标函数的局部近似值来间接优化参数θ。

In Policy-gradient methods, we optimize the parameter θ directly by performing the gradient ascent on the performance of the objective function.

在策略梯度方法中,我们通过对目标函数的性能进行梯度上升来直接优化参数θ。

Q4: Why do we use gradient ascent instead of gradient descent to optimize J(θ)?

问题4:为什么我们使用梯度上升而不是梯度下降来优化J(θ)?

We want to minimize J(θ) and gradient ascent gives us the gives the direction of the steepest increase of J(θ)

我们想要最小化J(θ),而梯度上升给出了J(θ)最陡峭增长的方向

We want to maximize J(θ) and gradient ascent gives us the gives the direction of the steepest increase of J(θ)

我们想要最大化J(θ),而梯度上升给出了J(θ)最陡峭增长的方向

Congrats on finishing this Quiz 🥳, if you missed some elements, take time to read the chapter again to reinforce (😏) your knowledge.

祝贺你完成了这次测验🥳,如果你错过了一些元素,请花时间再读一遍这一章,以巩固(😏)你的知识。