H7-Unit_4-Policy_Gradient_with_PyTorch-E4-(Optional)_the_Policy_Gradient_Theorem

中英文对照学习,效果更佳!

原课程链接:https://huggingface.co/deep-rl-course/unit8/visualize?fw=pt

(Optional) the Policy Gradient Theorem

(可选)政策梯度定理

In this optional section where we’re going to study how we differentiate the objective function that we will use to approximate the policy gradient.

在这个可选的部分中,我们将学习如何区分目标函数,我们将使用该函数来近似政策梯度。

Let’s first recap our different formulas:

让我们首先回顾一下我们的不同公式:

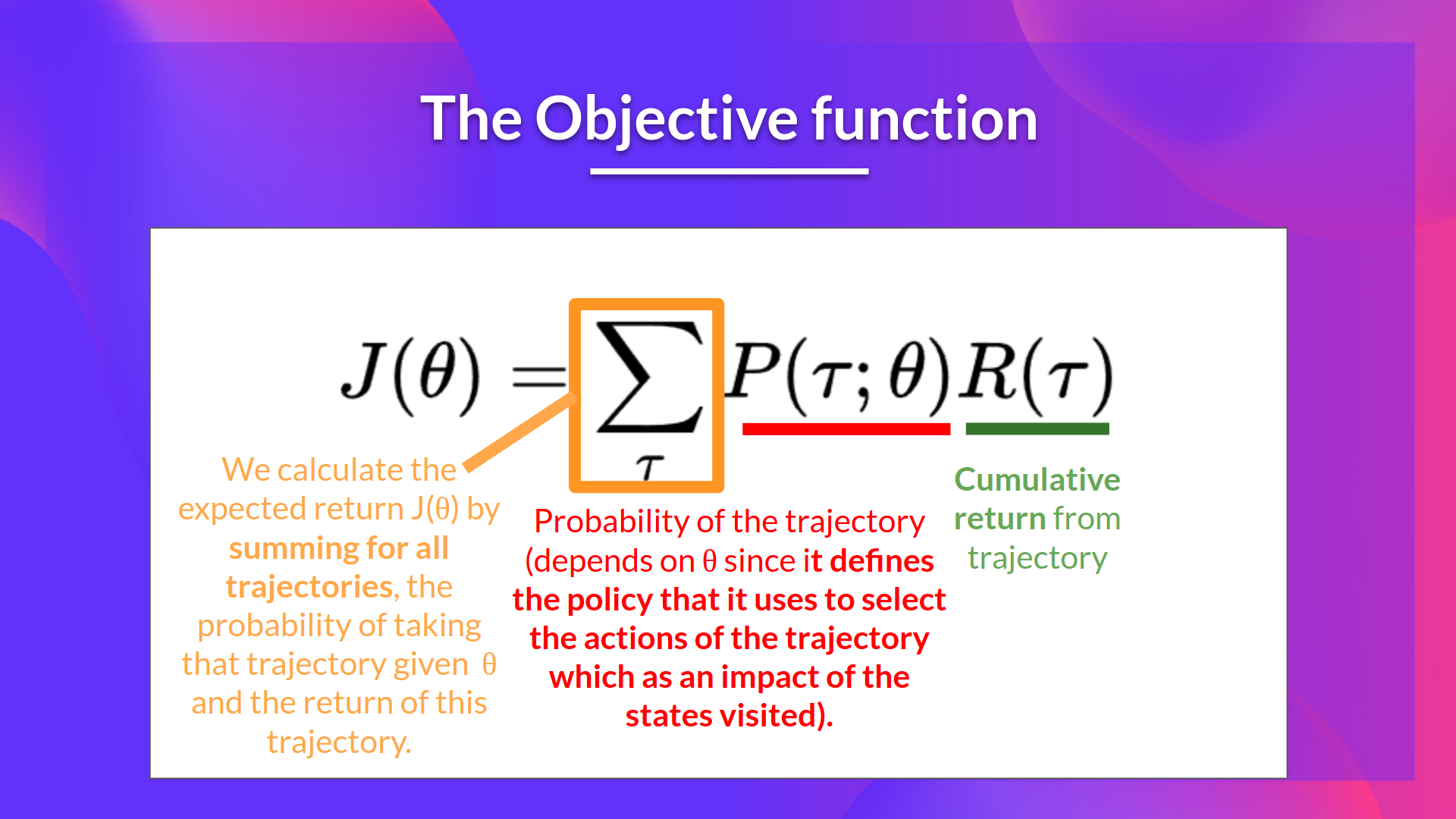

- The Objective function

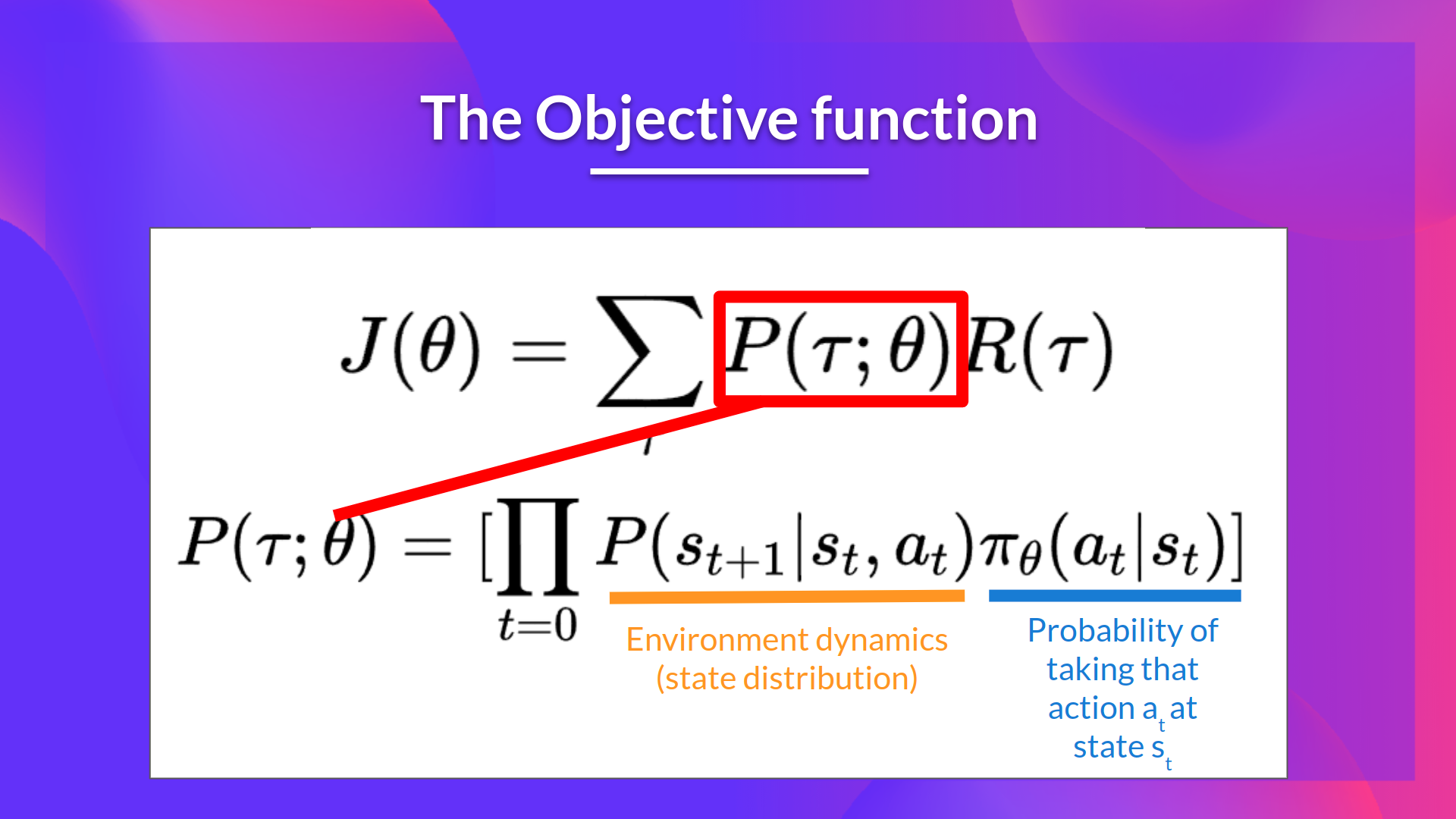

2. The probability of a trajectory (given that action comes from πθ\pi_\thetaπθ):

目标函数2.轨迹的概率(假设操作来自πθ\pi_\thetaπθReturn):

So we have:

概率,所以我们有:

∇θJ(θ)=∇θ∑τP(τ;θ)R(τ)\nabla_\theta J(\theta) = \nabla_\theta \sum_{\tau}P(\tau;\theta)R(\tau)∇θJ(θ)=∇θ∑τP(τ;θ)R(τ)

∇∇∑θJ(θ)=θτP(τ;θ)R(τ)\nabla_\theta J(\theta)=\nabla_\theta

We can rewrite the gradient of the sum as the sum of the gradient:

我们可以将和的梯度重写为梯度的和:

=∑τ∇θP(τ;θ)R(τ) = \sum_{\tau} \nabla_\theta P(\tau;\theta)R(\tau) =∑τ∇θP(τ;θ)R(τ)

=∑∇τθP(τ;θ)R(τ)=\∑_{\tau}\nabla_\theta P(\tau;\theta)R(\tau)=∇τθP(τ;θ)R(τ)=τθSUMτ;θP(τ)

We then multiply every term in the sum by P(τ;θ)P(τ;θ)\frac{P(\tau;\theta)}{P(\tau;\theta)}P(τ;θ)P(τ;θ)(which is possible since it’s = 1)

然后我们将总和中的每一项乘以P(τ;θ)P(τ;θ)\frac{P(\tau;\theta)}{P(\tau;\theta)}P(τ;θ)P(τ;θ)(which是可能的,因为它=1)

=∑τP(τ;θ)P(τ;θ)∇θP(τ;θ)R(τ) = \sum_{\tau} \frac{P(\tau;\theta)}{P(\tau;\theta)}\nabla_\theta P(\tau;\theta)R(\tau) =∑τP(τ;θ)P(τ;θ)∇θP(τ;θ)R(τ)

=\frac{P(\tau;\theta)}{P(\tau;\theta)}\nabla_\theta P(∑)∇θP(τ;θ)P(τ)P(τ;θ)θP(τ;θ)R(τ)=∇τP(τ;θ)R(τ;θ)=∑τP(τ;θ)P(τ;θ)θτ;θP(τ)

We can simplify further this since P(τ;θ)P(τ;θ)∇θP(τ;θ)=P(τ;θ)∇θP(τ;θ)P(τ;θ) \frac{P(\tau;\theta)}{P(\tau;\theta)}\nabla_\theta P(\tau;\theta) = P(\tau;\theta)\frac{\nabla_\theta P(\tau;\theta)}{P(\tau;\theta)} P(τ;θ)P(τ;θ)∇θP(τ;θ)=P(τ;θ)P(τ;θ)∇θP(τ;θ)

由于P(\frac{P(\tau;\theta)}{P(\tau;\theta)}\nabla_\theta)P(τ;θ)∇P(τ;θ)=P(τ;θ)∇θP(τ;θ)=P(τ;θ)θP(τ;θ)=P(τ;θ;\τ;θ)\τ;θ{\nabla_\theta P(\tau;\theta)}{P(\tau;\theta)}P(τ;θ)P(τ;∇P(θ)=P(θτ;θ)P(τ;θ)∇θτ;θP(τ;θ)(θ)θτ;θP(τ;θ)=P(τ;θ)θτ;θP(τ;θ)θθτ;θP(τ;θ)=P(τ;θ)θτ;θ

=∑τP(τ;θ)∇θP(τ;θ)P(τ;θ)R(τ)= \sum_{\tau} P(\tau;\theta) \frac{\nabla_\theta P(\tau;\theta)}{P(\tau;\theta)}R(\tau) =∑τP(τ;θ)P(τ;θ)∇θP(τ;θ)R(τ)

=∑∇P(∑)P(τ)P(τ;θ)P(τ;θ)R(τ)=\_{\tau}P(\tau;\theta)\{τ{\nabla_\theta P(\tau;\theta)}{P(\tau;\theta)}R(\tau)=∇θP(τ)P(ττ;θ)P(τ;θ)θτ;θττ;θP(τ;θ)

We can then use the derivative log trick (also called likelihood ratio trick or REINFORCE trick), a simple rule in calculus that implies that ∇xlogf(x)=∇xf(x)f(x) \nabla_x log f(x) = \frac{\nabla_x f(x)}{f(x)} ∇xlogf(x)=f(x)∇xf(x)

然后我们可以使用导数对数技巧(也称为似然比技巧或强化技巧),这是微积分中的一个简单规则,它意味着∇xlogf(X)=∇xf(X)f(X)\nabla_xlogf(X)=\∇{\nabla_x f(X)}{f(X)}∇xlogf(X)=f(X)∇xf(X)

So given we have ∇θP(τ;θ)P(τ;θ)\frac{\nabla_\theta P(\tau;\theta)}{P(\tau;\theta)} P(τ;θ)∇θP(τ;θ) we transform it as ∇θlogP(τ∣θ)\nabla_\theta log P(\tau|\theta) ∇θlogP(τ∣θ)

因此,假设我们有∇∇P(τ;θ)P(τ;θ)\FRAC{\nabla_\theta P(\tau;\theta)}{P(\tau;\theta)}P(τ;θ)∣∇∣θP(τ;θ),我们将其转换为τLog P(τ;θθτθ)\nabla_\thetaθP(\tau|\theta)∇θτθ(τLogθ)

So this is our likelihood policy gradient:

因此,这就是我们的可能性政策梯度:

∇θJ(θ)=∑τP(τ;θ)∇θlogP(τ;θ)R(τ) \nabla_\theta J(\theta) = \sum_{\tau} P(\tau;\theta) \nabla_\theta log P(\tau;\theta) R(\tau) ∇θJ(θ)=∑τP(τ;θ)∇θlogP(τ;θ)R(τ)

∇∑∇J(θ)=∑∇∇P(θ)R(τ;θ)R(τ)\nabla_\θJ(\θ)=\τ_{\tau}P(\tau;\theta)\nabla_\thetaP(\tau;\θ)R(\tau)∇θlogP(θ)=∑∇θθ(τ;θ)θτ;θlogP(τ;θ)R(τ)

Thanks for this new formula, we can estimate the gradient using trajectory samples (we can approximate the likelihood ratio policy gradient with sample-based estimate if you prefer).

多亏了这个新的公式,我们可以使用轨迹样本来估计梯度(如果你愿意,我们可以用基于样本的估计来近似似然比策略梯度)。

∇θJ(θ)=1m∑i=1m∇θlogP(τ(i);θ)R(τ(i))\nabla_\theta J(\theta) = \frac{1}{m} \sum^{m}{i=1} \nabla\theta log P(\tau^{(i)};\theta)R(\tau^{(i)})∇θJ(θ)=m1∑i=1m∇θlogP(τ(i);θ)R(τ(i)) where each τ(i)\tau^{(i)}τ(i) is a sampled trajectory.

∇θJ(θ)=1m∑i=1m∇θlogP(τ(i);θ)R(τ(i))\nabla_\theta J(\theta)=\FRAC{1}{m}\sum^{m}{i=1}\nabla\theta P(\tau^{(i)};\theta)R(\tau^{(i)})∇θJ(θ)=m1∑i=1m∇θlogP(τ(i);θ)R(τ(I)),其中每个τ(I)\tau^{(I)}τ(I)是一个采样轨迹。

But we still have some mathematics work to do there: we need to simplify ∇θlogP(τ∣θ) \nabla_\theta log P(\tau|\theta) ∇θlogP(τ∣θ)

但我们仍有一些数学工作要做:我们需要简化∇∣∇(∣θ)\nabla_\τθP(\tau|\theta)θτlogP(∣θθ)。

We know that:

我们知道:

∇θlogP(τ(i);θ)=∇θlog[μ(s0)∏t=0HP(st+1(i)∣st(i),at(i))πθ(at(i)∣st(i))]\nabla_\theta log P(\tau^{(i)};\theta)= \nabla_\theta log[ \mu(s_0) \prod_{t=0}^{H} P(s_{t+1}^{(i)}|s_{t}^{(i)}, a_{t}^{(i)}) \pi_\theta(a_{t}^{(i)}|s_{t}^{(i)})]∇θlogP(τ(i);θ)=∇θlog[μ(s0)∏t=0HP(st+1(i)∣st(i),at(i))πθ(at(i)∣st(i))]

∇θlogP(τ(i);θ)=∇θlog[μ(s0)∏t=0HP(st+1(i)∣st(i),at(I))Log(at(I)∣st(I))]\nabla_\πθP(tau^{(I)};\theta)=\nabla_\theta日志[\mU(S_0)\prod_{t=0}^{H}P(s_{t+1}^{(I)}|s_{t}^{(I)},a_{t}^{(I)})\pi_\theta(a_{t}^{(i)}|s_{t}^{(i)})]∇θlogP(τ(i);Log)=∇(S0)∏t=0HP(st+1(I)∣st(I),at(I))at(I)(at(I)∣st(I))][θ(s0θ)μ(s0πθ)t=0HθP(st+1(I)∣st(I),at(I))at(I)θμ(at(I)πθst(I)θ)]

Where μ(s0)\mu(s_0)μ(s0) is the initial state distribution and P(st+1(i)∣st(i),at(i)) P(s_{t+1}^{(i)}|s_{t}^{(i)}, a_{t}^{(i)}) P(st+1(i)∣st(i),at(i)) is the state transition dynamics of the MDP.

P(st+1(I)∣st(I),at(I))P(s_{t+1}^{(I)}|s_{t}^{(I)},a_{t}^{(I)})P(st+1(I)∣st(I)μ,at(I))是μ的状态转变动力学。

We know that the log of a product is equal to the sum of the logs:

我们知道一个产品的对数等于这些对数之和:

∇θlogP(τ(i);θ)=∇θ[logμ(s0)+∑t=0HlogP(st+1(i)∣st(i)at(i))+∑t=0Hlogπθ(at(i)∣st(i))]\nabla_\theta log P(\tau^{(i)};\theta)= \nabla_\theta \left[log \mu(s_0) + \sum\limits_{t=0}^{H}log P(s_{t+1}^{(i)}|s_{t}^{(i)} a_{t}^{(i)}) + \sum\limits_{t=0}^{H}log \pi_\theta(a_{t}^{(i)}|s_{t}^{(i)})\right] ∇θlogP(τ(i);θ)=∇θ[logμ(s0)+t=0∑HlogP(st+1(i)∣st(i)at(i))+t=0∑Hlogπθ(at(i)∣st(i))]

∇θlogP(τ(i);θ)=∇θ[logμ(s0)+∑t=0HlogP(st+1(i)∣st(i)at(i))+∑t=0Hlogπθ(at(i)∣st(i))]\nabla_\theta LOG P(\tau^{(I)};\theta)=\nabla_\theta\Left[log\u(S_0)+\sum\Limits_{t=0}^{H}log P(s_{t+1}^{(I)}|s_{t}^{(I)}a_{t}^{(I)})+\sum\Limits_{t=0}^{H}log\pi_\theta(a_{t}^{(I)}|s_{t}^{(I)})\right]∇θlogP(τ(I));θ)=∇θ[logμ(s0)+t=0∑HlogP(st+1(i)∣st(i)at(i))+t=0∑Hlogπθ(at(i)∣st(i))]

We also know that the gradient of the sum is equal to the sum of gradient:

我们还知道,和的梯度等于梯度的和:

∇θlogP(τ(i);θ)=∇θlogμ(s0)+∇θ∑t=0HlogP(st+1(i)∣st(i)at(i))+∇θ∑t=0Hlogπθ(at(i)∣st(i)) \nabla_\theta log P(\tau^{(i)};\theta)=\nabla_\theta log\mu(s_0) + \nabla_\theta \sum\limits_{t=0}^{H} log P(s_{t+1}^{(i)}|s_{t}^{(i)} a_{t}^{(i)}) + \nabla_\theta \sum\limits_{t=0}^{H} log \pi_\theta(a_{t}^{(i)}|s_{t}^{(i)}) ∇θlogP(τ(i);θ)=∇θlogμ(s0)+∇θt=0∑HlogP(st+1(i)∣st(i)at(i))+∇θt=0∑Hlogπθ(at(i)∣st(i))

∇θlogP(τ(i);θ)=∇θlogμ(s0)+∇θ∑t=0HlogP(st+1(i)∣st(i)at(i))+∇θ∑t=0Hlogπθ(at(i)∣st(i))theta log P(tau^{(I)};\theta)=\nabla_\theta log\mU(S_0)+\nabla_\theta\sum\Limits{t=0}^{H}log P(s_{t+1}^{(I)}|s_{t}^{(I)}a_{t}^{(I)})+\nabla_\theta\sum\Limits_{t=0}^{H}log\pi_\theta(a_{t}^{(I)}|s_{t}^{(I)})∇θlogP(τ(I));θ)=∇θlogμ(s0)+∇θt=0∑HlogP(st+1(i)∣st(i)at(i))+∇θt=0∑Hlogπθ(at(i)∣st(i))

Since neither initial state distribution or state transition dynamics of the MDP are dependent of θ\thetaθ, the derivate of both terms are 0. So we can remove them:

由于MDP的初始态分布和状态转变动力学都不依赖于θ\thetaθ,所以这两项的导数都是0。这样我们就可以移除它们:

Since:

∇θ∑t=0HlogP(st+1(i)∣st(i)at(i))=0\nabla_\theta \sum_{t=0}^{H} log P(s_{t+1}^{(i)}|s_{t}^{(i)} a_{t}^{(i)}) = 0 ∇θ∑t=0HlogP(st+1(i)∣st(i)at(i))=0 and ∇θμ(s0)=0 \nabla_\theta \mu(s_0) = 0∇θμ(s0)=0

自:∇θ∑t=0HlogP(st+1(i)∣st(i)at(i))=0\nabla_\theta\sum_{t=0}^{H}log P(s_{t+1}^{(I)}|s_{t}^{(I)}a_{t}^{(I)})=0∇∑t=0HlogP(st+1(I)∣st(I)at(I))=0∇θ(S0)=0\nabla_\θμ\u(s_0)=0∇(S0θμ)=0

∇θlogP(τ(i);θ)=∇θ∑t=0Hlogπθ(at(i)∣st(i))\nabla_\theta log P(\tau^{(i)};\theta) = \nabla_\theta \sum_{t=0}^{H} log \pi_\theta(a_{t}^{(i)}|s_{t}^{(i)})∇θlogP(τ(i);θ)=∇θ∑t=0Hlogπθ(at(i)∣st(i))

∇θlogP(τ(i);θ)=∇θ∑t=0Hlogπθ(at(i)∣st(i))\nabla_\theta Log P(\tau^{(I)};\theta)=\nabla_\theta\Sum_{t=0}^{H}Log\pi_\theta(a_{t}^{(i)}|s_{t}^{(i)})∇θlogP(τ(i);θ)=∇θ∑t=0Hlogπθ(at(i)∣st(i))

We can rewrite the gradient of the sum as the sum of gradients:

我们可以将和的梯度重写为梯度之和:

∇θlogP(τ(i);θ)=∑t=0H∇θlogπθ(at(i)∣st(i)) \nabla_\theta log P(\tau^{(i)};\theta)= \sum_{t=0}^{H} \nabla_\theta log \pi_\theta(a_{t}^{(i)}|s_{t}^{(i)}) ∇θlogP(τ(i);θ)=∑t=0H∇θlogπθ(at(i)∣st(i))

∇θlogP(τ(i);θ)=∑t=0H∇θlogπθ(at(i)∣st(i))∇θlogP(τ(I);θ)=∇t=0Hθτθ(at(I)∣st(I))\nabla_\thetaπθP(\tau^{(I)};\theta)=\sum_{t=0}^{H}\nabla_\theta log(a_{t}^{(I)}|s_{t}^{(I)})

So, the final formula for estimating the policy gradient is:

因此,估计政策梯度的最终公式是:

∇θJ(θ)=g^=1m∑i=1m∑t=0H∇θlogπθ(at(i)∣st(i))R(τ(i)) \nabla_{\theta} J(\theta) = \hat{g} = \frac{1}{m} \sum^{m}{i=1} \sum^{H}{t=0} \nabla_\theta \log \pi_\theta(a^{(i)}{t} | s{t}^{(i)})R(\tau^{(i)}) ∇θJ(θ)=g^=m1∑i=1m∑t=0H∇θlogπθ(at(i)∣st(i))R(τ(i))

∇θJ(θ)=g^=1m∑i=1m∑t=0H∇θlogπθ(at(i)∣st(i))R(τ(i)){g}=\FRAC{1}{m}\sum^{m}{i=1}\sum^{H}{t=0}\nabla_\theta\Log\pi_\theta(a^{(I)}{t}|s{t}^{(I)})R(\tau^{(i)})∇θJ(θ)=g^=m1∑i=1m∑t=0H∇θlogπθ(at(i)∣st(i))R(τ(i))