F5-Unit_3-Deep_Q_Learning_with_Atari_Games-G6-Quiz

中英文对照学习,效果更佳!

原课程链接:https://huggingface.co/deep-rl-course/unit7/introduction?fw=pt

Quiz

小测验

The best way to learn and to avoid the illusion of competence is to test yourself. This will help you to find where you need to reinforce your knowledge.

学习和避免能力幻觉的最好方法是测试自己。这将帮助你找到你需要加强知识的地方。

Q1: We mentioned Q Learning is a tabular method. What are tabular methods?

问1:我们提到Q学习是一种表格方法。什么是表格法?

Solution

Tabular methods is a type of problem in which the state and actions spaces are small enough to approximate value functions to be represented as arrays and tables. For instance, Q-Learning is a tabular method since we use a table to represent the state, and action value pairs.

解表方法是这样一类问题,其中状态和动作空间足够小,以近似表示为数组和表的值函数。例如,Q-Learning是一种表格方法,因为我们使用表格来表示状态和动作值对。

Q2: Why can’t we use a classical Q-Learning to solve an Atari Game?

问2:为什么我们不能用经典的Q学习来解决雅达利游戏?

Atari environments are too fast for Q-Learning

雅达利的环境太快了,不适合Q-Learning

Atari environments have a big observation space. So creating an updating the Q-Table would not be efficient

雅达利的环境有很大的观察空间。因此,创建Q表的更新将不会有效率

Q3: Why do we stack four frames together when we use frames as input in Deep Q-Learning?

问题3:当我们在深度Q-学习中使用框架作为输入时,为什么我们将四个框架堆叠在一起?

Solution

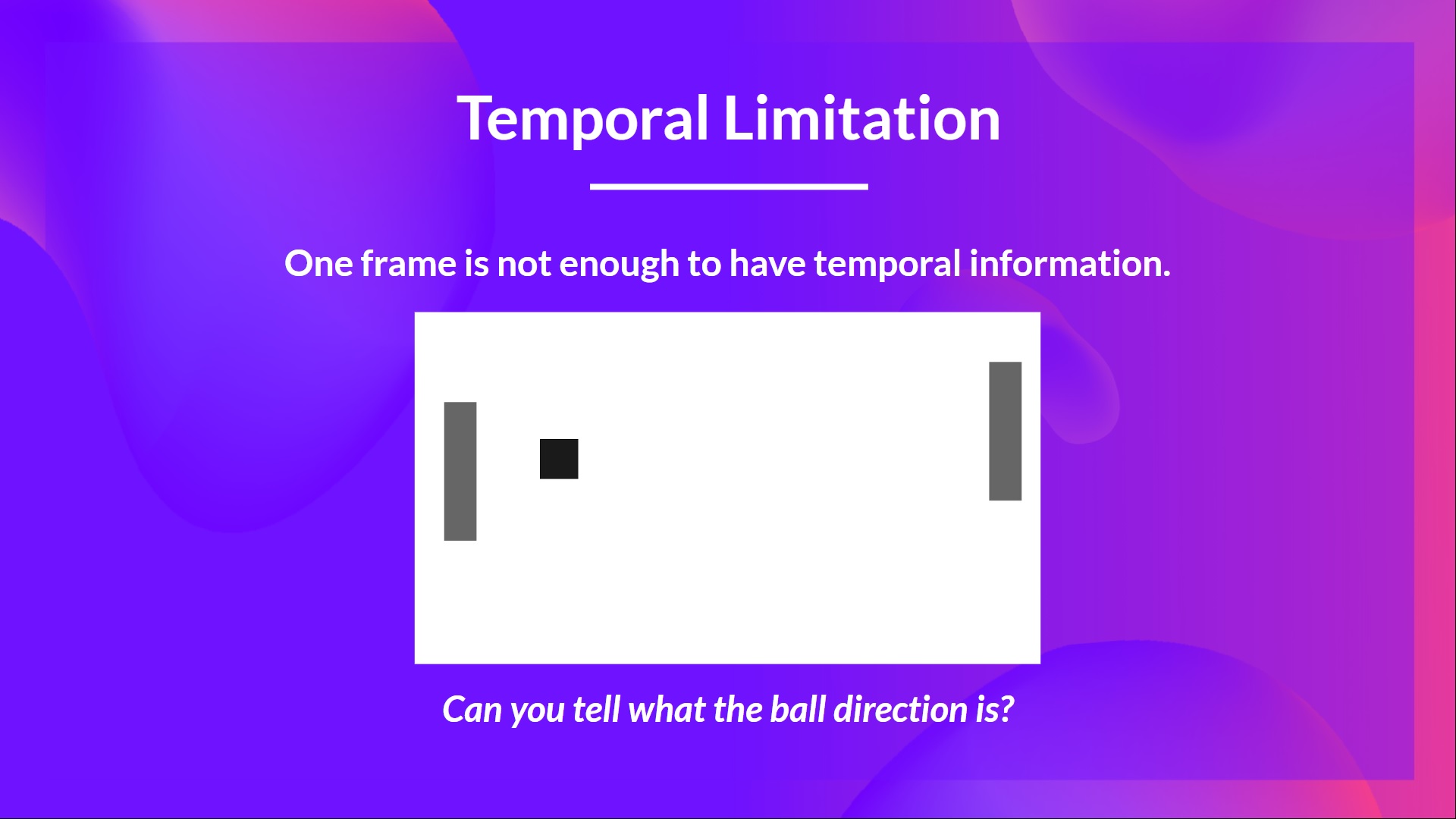

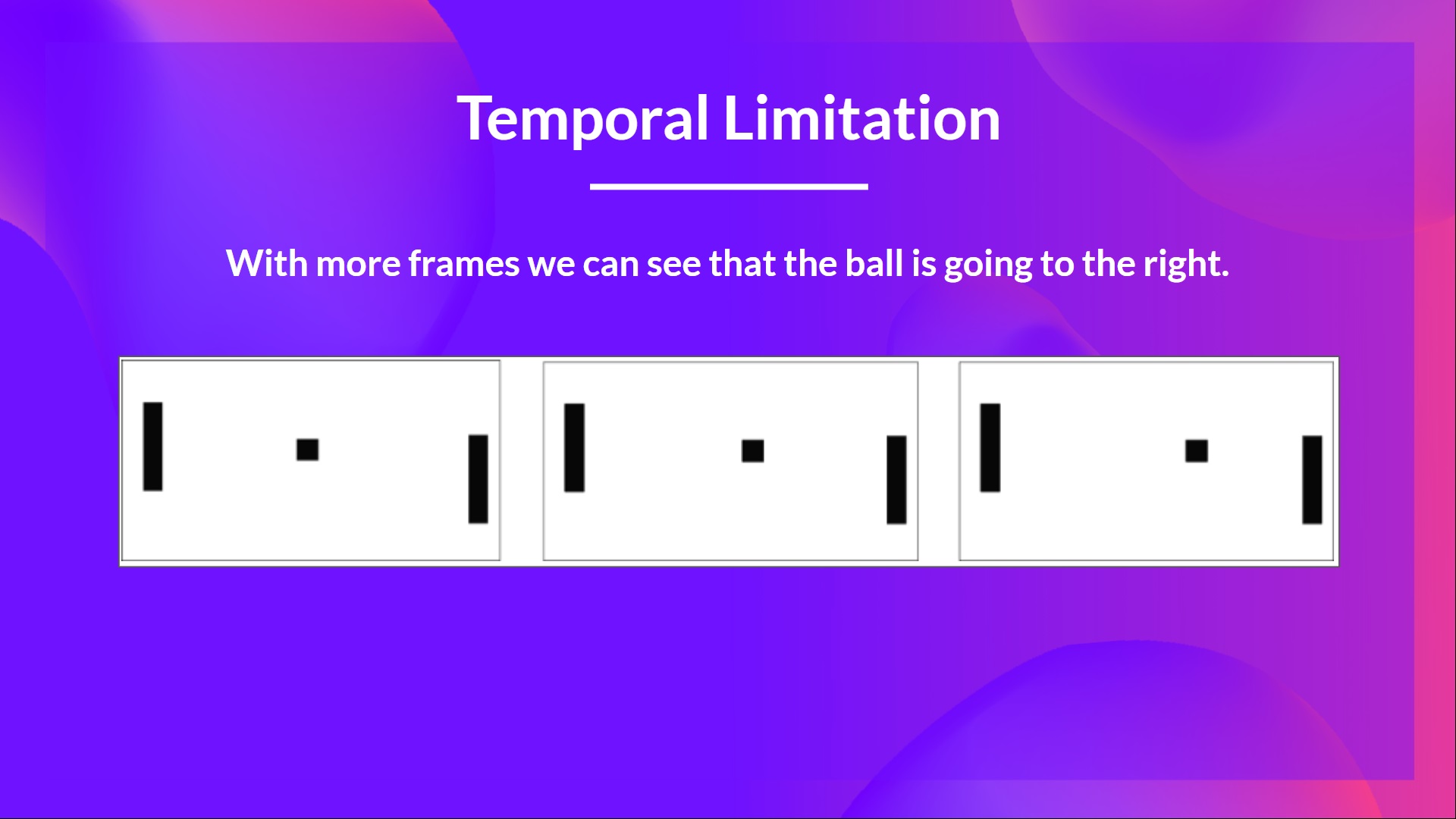

We stack frames together because it helps us handle the problem of temporal limitation: one frame is not enough to capture temporal information.

For instance, in pong, our agent will be unable to know the ball direction if it gets only one frame.

解决方案我们将帧堆叠在一起是因为它帮助我们处理时间限制的问题:一个帧不足以捕获时间信息。例如,在乒乓球比赛中,如果只有一帧,我们的代理将无法知道球的方向。

时间限制时间限制

Q4: What are the two phases of Deep Q-Learning?

问题4:深度问答学习的两个阶段是什么?

Sampling

抽样

Shuffling

洗牌

Reranking

重新排序

Training

培训

Q5: Why do we create a replay memory in Deep Q-Learning?

问题5:为什么我们要在深度问答学习中创造一段回放记忆?

Solution

1. Make more efficient use of the experiences during the training

解决方案1.更有效地利用培训过程中的经验

Usually, in online reinforcement learning, the agent interacts in the environment, gets experiences (state, action, reward, and next state), learns from them (updates the neural network), and discards them. This is not efficient.

But, with experience replay, we create a replay buffer that saves experience samples that we can reuse during the training.

通常,在在线强化学习中,代理在环境中交互,获得经验(状态、动作、奖励和下一个状态),从中学习(更新神经网络),然后丢弃它们。这不是很有效率。但是,通过经验回放,我们创建了一个回放缓冲区,其中保存了我们可以在培训期间重复使用的经验样本。

2. Avoid forgetting previous experiences and reduce the correlation between experiences

2.避免忘记以前的经历,减少经验之间的相关性

The problem we get if we give sequential samples of experiences to our neural network is that it tends to forget the previous experiences as it overwrites new experiences. For instance, if we are in the first level and then the second, which is different, our agent can forget how to behave and play in the first level.

如果我们给我们的神经网络提供连续的经验样本,我们得到的问题是,当它覆盖新的经验时,它往往会忘记以前的经验。例如,如果我们处于第一级,然后是第二级,这是不同的,我们的经纪人可能会忘记如何在第一级表现和发挥。

Q6: How do we use Double Deep Q-Learning?

问题6:我们如何使用双重深度Q-学习?

Solution

When we compute the Q target, we use two networks to decouple the action selection from the target Q value generation. We:

解决方案当我们计算Q目标时,我们使用两个网络将动作选择与目标Q值生成分离。我们:

- Use our DQN network to select the best action to take for the next state (the action with the highest Q value).

- Use our Target network to calculate the target Q value of taking that action at the next state.

Congrats on finishing this Quiz 🥳, if you missed some elements, take time to read again the chapter to reinforce (😏) your knowledge.

使用我们的DQN网络选择下一状态下要采取的最佳操作(具有最高Q值的操作)。使用我们的目标网络计算在下一状态采取该操作的目标Q值。祝贺您完成本测验🥳,如果您遗漏了一些元素,请花时间再次阅读本章以巩固(😏)您的知识。